I recently had to troubleshoot an issue for a client with only about 30 people in the organization. They use a mix of Mac and PC notebooks and desktops to access a single Exchange Server 2013 server (all roles installed) over a mix of MAPI, IMAP4 and EWS. The volume of email within the organization isn’t particularly large, but users do tend to send attachments as large as 40MB. Exchange is also configured to journal to a third-party provider, which effectively doubles every email with an attachment that is sent.

One of the users noticed that he would intermittently receive the following message in Mac Mail at various times of the week:

Cannot send message using the server

The sender address some@emailaddress.com was rejected by the server webmail.url.com

The server response was: Insufficient system resources

Select a different outgoing mail server from the list below or click Try Later to leave the message in your Outbox until it can be sent.

Reviewing the event logs shows that whenever the user receives the error message above, Exchange also logs the following:

Log Name: Application

Source: MSExchangeTransport

Event ID: 15004 (Warning)

The resource pressure increased from Medium to High.

The following resources are under pressure:

Version buckets = 278 [High] [Normal=80 Medium=120 High=200]

The following components are disabled due to back pressure:

Inbound mail submission from Hub Transport servers

Inbound mail submission from the Internet

Mail submission from Pickup directory

Mail submission from Replay directory

Mail submission from Mailbox server

Mail delivery to remote domains

Content aggregation

Mail resubmission from the Message Resubmission component.

Mail resubmission from the Shadow Redundancy Component

The following resources are in normal state:

Queue database and disk space ("C:\Program Files\Microsoft\Exchange Server\V15\TransportRoles\data\Queue\mail.que") = 77% [Normal] [Normal=95% Medium=97% High=99%]

Queue database logging disk space ("C:\Program Files\Microsoft\Exchange Server\V15\TransportRoles\data\Queue") = 80% [Normal] [Normal=94% Medium=96% High=98%]

Private bytes = 6% [Normal] [Normal=71% Medium=73% High=75%]

Physical memory load = 67% [limit is 94% to start dehydrating messages.]

Submission Queue = 0 [Normal] [Normal=2000 Medium=4000 High=10000]

Temporary Storage disk space ("C:\Program Files\Microsoft\Exchange Server\V15\TransportRoles\data\Temp") = 80% [Normal] [Normal=95% Medium=97% High=99%]
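If you want to track how often these warnings fire and how high the count gets, the resource lines in the event text follow a regular pattern. The Python snippet below is a minimal sketch that parses one such line, assuming the exact format shown in the event above (copied out of Event Viewer as plain text). `parse_resource_line` is a hypothetical helper, not part of any Exchange tooling.

```python
import re

# Matches lines such as:
#   Version buckets = 278 [High] [Normal=80 Medium=120 High=200]
#   Private bytes = 6% [Normal] [Normal=71% Medium=73% High=75%]
RESOURCE_LINE = re.compile(
    r"^(?P<name>.+?) = (?P<value>\d+)%? \[(?P<state>Normal|Medium|High)\] "
    r"\[Normal=(?P<normal>\d+)%? Medium=(?P<medium>\d+)%? High=(?P<high>\d+)%?\]"
)


def parse_resource_line(line):
    """Return the reading from one 15004 resource line, or None if it doesn't match."""
    match = RESOURCE_LINE.match(line.strip())
    if match is None:
        return None
    return {
        "name": match.group("name"),
        "value": int(match.group("value")),
        "state": match.group("state"),
        "thresholds": {
            "Normal": int(match.group("normal")),
            "Medium": int(match.group("medium")),
            "High": int(match.group("high")),
        },
    }
```

Lines without a bracketed threshold list, such as the physical memory load line, return None.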

Aside from seeing the pressure go from Medium to High, I’ve also seen pressure go from Normal to High:

The resource pressure increased from Normal to High.

The following resources are under pressure:

Version buckets = 155 [High] [Normal=80 Medium=120 High=200]

The following components are disabled due to back pressure:

Inbound mail submission from Hub Transport servers

Inbound mail submission from the Internet

Mail submission from Pickup directory

Mail submission from Replay directory

Mail submission from Mailbox server

Mail delivery to remote domains

Content aggregation

Mail resubmission from the Message Resubmission component.

Mail resubmission from the Shadow Redundancy Component

The following resources are in normal state:

Queue database and disk space ("C:\Program Files\Microsoft\Exchange Server\V15\TransportRoles\data\Queue\mail.que") = 77% [Normal] [Normal=95% Medium=97% High=99%]

Queue database logging disk space ("C:\Program Files\Microsoft\Exchange Server\V15\TransportRoles\data\Queue") = 80% [Normal] [Normal=94% Medium=96% High=98%]

Private bytes = 5% [Normal] [Normal=71% Medium=73% High=75%]

Physical memory load = 67% [limit is 94% to start dehydrating messages.]

Submission Queue = 0 [Normal] [Normal=2000 Medium=4000 High=10000]

Temporary Storage disk space ("C:\Program Files\Microsoft\Exchange Server\V15\TransportRoles\data\Temp") = 80% [Normal] [Normal=95% Medium=97% High=99%]

A bit of research on the internet pointed me to various possible reasons for this. The one that appeared to be the cause in this environment was that users were sending attachments so large that they filled up the version buckets faster than Exchange could process them. One of the cmdlets a forum user suggested running was the following:

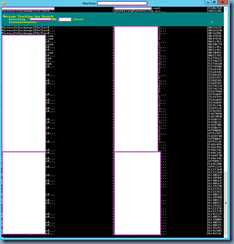

Get-MessageTrackingLog -ResultSize Unlimited -Start "04/03/2014 00:00:00" | Where-Object {$_.TotalBytes -gt 20240000} | Select-Object Sender, Subject, Recipients, TotalBytes
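To try different size thresholds offline, you can export the tracking log columns without the size filter, for example by appending `| Export-Csv C:\tracking.csv -NoTypeInformation` (the file name is just an example). The Python sketch below assumes such a CSV with Sender, Subject and TotalBytes columns and applies the same 20240000-byte cut-off (roughly 19.3MB). The function name and the sample rows in the test are hypothetical.

```python
import csv
import io

# Same cut-off as the cmdlet above: 20,240,000 bytes, roughly 19.3 MB.
SIZE_THRESHOLD_BYTES = 20_240_000
BYTES_PER_MB = 1024 * 1024


def large_messages(csv_text, threshold=SIZE_THRESHOLD_BYTES):
    """Return (sender, subject, size in MB) for every row above the threshold.

    Assumes a CSV with Sender, Subject and TotalBytes columns, such as one
    produced by Export-Csv; the column names are an assumption.
    """
    results = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        size = int(row["TotalBytes"])
        if size > threshold:
            results.append((row["Sender"], row["Subject"], round(size / BYTES_PER_MB, 1)))
    return results
```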

Executing the cmdlet above displayed the following:

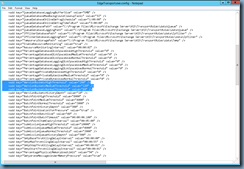

Further digging into the logs revealed quite a few emails with large attachments sent around the time the warning was logged. After consulting with our Partner Forum support engineer, I decided to increase the thresholds that determine whether the pressure is Normal, Medium or High. The keys of interest are found on the Exchange server in the following folder:
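Matching large messages against warning times can be done by eye for a handful of events, but a short script makes the check repeatable. This Python sketch assumes you have already extracted message timestamps and sizes from the tracking logs, and the times of the 15004 warnings from the Application log. The 15-minute window and the function name are assumptions, not values taken from Exchange.

```python
from datetime import datetime, timedelta

# Arbitrary assumption: treat messages within 15 minutes of a warning as related.
DEFAULT_WINDOW = timedelta(minutes=15)


def messages_near_warnings(messages, warnings, window=DEFAULT_WINDOW):
    """Return messages sent within `window` of any 15004 warning, largest first.

    messages: iterable of (timestamp, total_bytes, subject) tuples
    warnings: iterable of warning timestamps (datetime objects)
    """
    warnings = list(warnings)
    hits = [
        (sent, size, subject)
        for sent, size, subject in messages
        if any(abs(sent - warned) <= window for warned in warnings)
    ]
    # Sort by message size so the biggest suspects are listed first.
    return sorted(hits, key=lambda hit: hit[1], reverse=True)
```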

C:\Program Files\Microsoft\Exchange Server\V15\Bin

… in the following file:

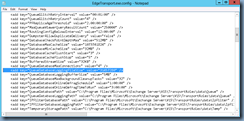

EdgeTransport.exe.config

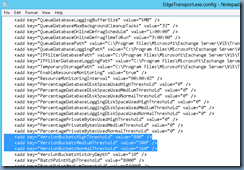

From within this file, look for the following keys:

<add key="VersionBucketsHighThreshold" value="200" />

<add key="VersionBucketsMediumThreshold" value="120" />

<add key="VersionBucketsNormalThreshold" value="80" />

Change the values to higher numbers (I simply doubled each one):

<add key="VersionBucketsHighThreshold" value="400" />

<add key="VersionBucketsMediumThreshold" value="240" />

<add key="VersionBucketsNormalThreshold" value="160" />
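Editing the file by hand is simplest, but if you manage several servers the change can be scripted. The Python sketch below uses the standard library's `xml.etree.ElementTree` to multiply the three version bucket thresholds by a factor (2, as above). `scale_version_buckets` is a hypothetical helper. ElementTree drops XML comments and rewrites the formatting, so run it against a copy and keep a backup of the original EdgeTransport.exe.config.

```python
import xml.etree.ElementTree as ET

VERSION_BUCKET_KEYS = (
    "VersionBucketsHighThreshold",
    "VersionBucketsMediumThreshold",
    "VersionBucketsNormalThreshold",
)


def scale_version_buckets(config_xml, factor=2):
    """Return the config XML with the three version bucket thresholds multiplied.

    Every other <add> key is left untouched. Comments in the input are lost
    because ElementTree's default parser discards them.
    """
    root = ET.fromstring(config_xml)
    for add in root.iter("add"):
        if add.get("key") in VERSION_BUCKET_KEYS:
            add.set("value", str(int(add.get("value")) * factor))
    return ET.tostring(root, encoding="unicode")
```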

Another key that was suggested for change was:

<add key="DatabaseMaxCacheSize" value="134217728" />

… but on Exchange 2013 this key already defaults to 512MB (134217728 bytes is only 128MB), so there was no need to modify it.

With these new values in place, I restarted the Microsoft Exchange Transport service so that they would take effect.

A month went by without any complaints, and the logs revealed that while the version buckets had risen to the Medium threshold, they never reached the new High threshold of 400.

Another month passed and I got another call from the same user saying the problem had reappeared. A quick look at the logs showed that the version buckets had indeed reached the new High threshold of 400. With no ideas left, I opened a support call with Microsoft, and the engineer gave me essentially the same explanation: most likely a lot of large attachments were being sent, so Exchange was unable to flush the messages held in memory quickly enough. I explained that there were only 30 people and the attachments weren’t that big, so she exported the tracking logs to review them. After about 30 minutes she agreed that, while there were attachments, none of them exceeded 40MB. At this point, she listed the following configuration changes we could try:

- Modify the Normal, Medium, High version buckets keys

- Modify the DatabaseMaxCacheSize

- Modify the QueueDatabaseLoggingFileSize

- Modify the DatabaseCheckPointDepthMax

- Set limits on message sizes

- Increase the memory on the server

After reviewing the version bucket thresholds I had set by doubling the default values, she said we did not need to increase them further. The question I immediately asked was whether I could if I wanted to, and she said yes, but setting them too high risks the server running out of memory and crashing.

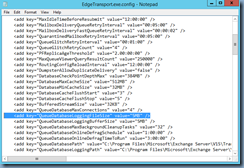

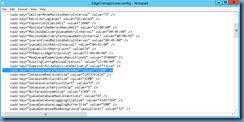

The DatabaseMaxCacheSize key was still at its default of 512MB, so she asked me to double it to 1GB:

<add key="DatabaseMaxCacheSize" value="1073741824" />

The QueueDatabaseLoggingFileSize key:

<add key="QueueDatabaseLoggingFileSize" value="5MB" />

… was changed to 31457280 bytes (30MB):

<add key="QueueDatabaseLoggingFileSize" value="31457280" />

The DatabaseCheckPointDepthMax key:

<add key="DatabaseCheckPointDepthMax" value="384MB" />

… was changed to:

<add key="DatabaseCheckPointDepthMax" value="512MB" />
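Because these keys mix plain byte counts with MB suffixes, it is easy to misread the numbers. The quick Python check below assumes MB means 1,048,576 bytes (binary megabytes). It confirms that 134217728 is 128MB, that 1073741824 is 1GB (double the 512MB default), and that 31457280 is 30MB, six times the original 5MB. The `to_bytes` helper is hypothetical and only handles the two forms used in this post.

```python
BYTES_PER_MB = 1024 * 1024  # assumption: binary megabytes


def to_bytes(value):
    """Convert a config value such as "512MB" or "31457280" to a byte count.

    Only plain byte counts and the MB suffix are handled; other suffixes
    would need extra cases.
    """
    text = value.strip().upper()
    if text.endswith("MB"):
        return int(text[:-2]) * BYTES_PER_MB
    return int(text)


# Print each value from this post in MB for a quick visual check.
for raw in ("134217728", "1073741824", "5MB", "31457280", "384MB", "512MB"):
    print(f"{raw:>12} = {to_bytes(raw) / BYTES_PER_MB:g} MB")
```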

Next, she used the following cmdlets to review the message size limits currently set:

Get-ExchangeServer | fl name, admindisplayversion, serverrole, site, edition

Get-Transportconfig | fl *size*

Get-sendconnector | fl Name, *size*

Get-receiveconnector | fl Name, *size*

Get-Mailbox -ResultSize Unlimited | fl Name, MaxSendSize, MaxReceiveSize > C:\mbx.txt

Get-MailboxDatabase | FL

Get-Mailboxdatabase -Server <serverName> | FL Identity,IssueWarningQuota,ProhibitSendQuota,ProhibitSendReceiveQuota

Get-Mailbox -Server <ServerName> -ResultSize Unlimited | Where {$_.UseDatabaseQuotaDefaults -eq $false} | ft DisplayName,IssueWarningQuota,ProhibitSendQuota,@{label="TotalItemSize(MB)";expression={(Get-MailboxStatistics $_).TotalItemSize.Value.ToMB()}}
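With 30 mailboxes, the mbx.txt file written by the Get-Mailbox command above is short enough to read by eye. In a larger organization, a script can flag mailboxes whose limits are Unlimited (so they rely on the organization-wide settings) or above 100MB. This Python sketch assumes the usual Format-List layout (`Name : value`, with a blank line between mailboxes) and that sizes appear as, for example, `100 MB (104,857,600 bytes)`. Windows PowerShell's `>` redirection writes UTF-16, so open the file with `encoding="utf-16"`. Long values that Format-List wraps onto a second line are not handled. All function names are hypothetical.

```python
import re

BYTES_IN_PARENS = re.compile(r"\(([\d,]+) bytes\)")
LIMIT_BYTES = 100 * 1024 * 1024  # the 100MB limit used in this post


def parse_fl_output(text):
    """Split Format-List output into one dict per mailbox (blank-line separated)."""
    records, current = [], {}
    for line in text.splitlines():
        if not line.strip():
            if current:
                records.append(current)
                current = {}
            continue
        key, _, value = line.partition(":")
        current[key.strip()] = value.strip()
    if current:
        records.append(current)
    return records


def size_in_bytes(value):
    """Return bytes for '100 MB (104,857,600 bytes)', or None for 'Unlimited'."""
    match = BYTES_IN_PARENS.search(value)
    return int(match.group(1).replace(",", "")) if match else None


def mailboxes_over_limit(text, limit=LIMIT_BYTES):
    """Names of mailboxes whose send or receive size is Unlimited or above limit."""
    flagged = []
    for record in parse_fl_output(text):
        for field in ("MaxSendSize", "MaxReceiveSize"):
            size = size_in_bytes(record.get(field, ""))
            if size is None or size > limit:
                flagged.append(record.get("Name"))
                break  # one flag per mailbox is enough
    return flagged
```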

She noticed that I had already set limits on the mailbox database, but wanted me to set all of the connectors and some other configurations to 100MB limits by executing the following cmdlets:

Get-TransportConfig | Set-TransportConfig -MaxReceiveSize 100MB

Get-TransportConfig | Set-TransportConfig -MaxSendSize 100MB

Get-SendConnector | Set-SendConnector -MaxMessageSize 100MB

Get-ReceiveConnector | Set-ReceiveConnector -MaxMessageSize 100MB

Get-TransportConfig | Set-TransportConfig -ExternalDsnMaxMessageAttachSize 100MB -InternalDsnMaxMessageAttachSize 100MB

Get-Mailbox -ResultSize Unlimited | Set-Mailbox -MaxSendSize 100MB -MaxReceiveSize 100MB
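When choosing these limits, keep in mind that attachments are base64-encoded in MIME. That encoding turns every 3 bytes into 4 characters and typically adds a CRLF after every 76-character line, so an attachment grows by roughly 37% on its way through transport. The Python sketch below assumes the limit is checked against the encoded size and ignores headers and other message parts. It estimates the largest raw attachment that fits under 100MB (about 73MB) and the encoded size of one of the 40MB attachments mentioned earlier (roughly 55MB). The function names are hypothetical.

```python
MB = 1024 * 1024


def mime_base64_size(raw_bytes, line_length=76):
    """Approximate size of an attachment once base64-encoded for MIME.

    Base64 turns every 3 input bytes into 4 output characters; the output is
    assumed to be wrapped at 76 characters per line with a CRLF after each line.
    """
    encoded = 4 * ((raw_bytes + 2) // 3)
    lines = (encoded + line_length - 1) // line_length
    return encoded + 2 * lines


def largest_attachment_under(limit_bytes):
    """Largest raw attachment (in bytes) whose encoded form fits in limit_bytes."""
    # Binary search works because the encoded size never shrinks as input grows.
    low, high = 0, limit_bytes
    while low < high:
        mid = (low + high + 1) // 2
        if mime_base64_size(mid) <= limit_bytes:
            low = mid
        else:
            high = mid - 1
    return low


print(f"Largest raw attachment under 100MB: {largest_attachment_under(100 * MB) / MB:.1f} MB")
print(f"Encoded size of a 40MB attachment: {mime_base64_size(40 * MB) / MB:.1f} MB")
```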

The last item we could modify was the server’s memory, but as 16GB was already assigned, she said we could leave it as is for now and monitor the event logs for a while. It has now been 3 weeks, and while the version buckets have reached Medium, they have consistently stayed at 282 or below, under the new High threshold of 400.

Troubleshooting this issue was quite frustrating for me, as there wasn’t really a KB article with clear instructions for all of these checks, so I hope this post helps anyone who runs into this issue in their environment.

2 Responses

Terence,

Long time, no text or chat since we worked downtown in Toronto….

Have you suggested they use a third-party application for transferring files that has a plug-in for Exchange/Outlook? Exchange is not an FTP server and, unless Microsoft integrates some other functionality, it is not tuned for that.

I understand it's hard to convey this message to C-level executives when Microsoft spouts their 'Collaboration' marketing.

People such as you and I are stuck in the middle trying to make it all work. 😉

Hey,

Did the problem come back? I'm experiencing the same issue and want to know if you were able to fix it at all.