I was out at a client’s site to close off a project with a list of items that I had written down to complete. As 3:30 p.m. came around and everything was happily chugging along, I started writing a status report for my PM:

| Task | Details | Status | Completed On |
| --- | --- | --- | --- |
| Redeploy vCenter on 64-bit OS and with full SQL Server | We are currently still using the preproduction vCenter which resides on a 32-bit Windows 2008 R1 OS (it was incompatible with R2 when we originally deployed it). | Completed | July 9th, 2010 |
| Configure VUM (Update Manager) | Update Manager has not been configured and will be installed and configured after the new vCenter has been deployed. | Completed | July 9th, 2010 |
| Cisco UCS with vSphere Distributed Power Management | The Cisco UCS IPMI profile has not been configured with vSphere Distributed Power Management. | Completed | July 9th, 2010 |

| Task | Details | Status | Scheduled |
| --- | --- | --- | --- |
| Update ESX servers to Update 2 | The UCS blades currently have ESX 4.0 Update 1 installed and will need to get updated. | Incomplete | TBD |

Other than the last item on my list, which was incomplete because there were 2 SQL servers configured with MSCS that could not be taken offline, everything was going according to schedule.

An important note I’d like to make before I continue is that the client requested that vCenter use a SQL instance on a separate virtual machine. I was fine with that, so I went ahead and deployed a new virtual machine from a pristine Windows Server 2008 R2 template, set up according to best practices (locked-down domain accounts for service accounts). More on this a bit later.

So come 4:00 p.m., I figured I should start to pack up and head out to a dinner I had planned, but as the time ticked over to 4:08 p.m., everything started falling apart. The northbound connection from fabric interconnect B went down. We had been experiencing this problem for the past few weeks and had just swapped out both switches last week, so we thought it was fixed.

I went ahead and reseated the x2 module and the link came back up, but a few minutes later fabric interconnect A’s link went down. What was different from what we had experienced before swapping out the x2 modules was that both links would now go down one after another. What was also different was that the fabric interconnect labeled “subordinate” would not have an active link while our “primary” fabric interconnect would have its northbound link down.

Seeing how the links kept going down one after another, I figured we would have to contact Cisco at some point, so I went ahead and PuTTY-ed into the primary fabric interconnect and rebooted it so that it would become the subordinate. I know I didn’t have to, because the redundant link would have continued working, and I wish I hadn’t, because once I rebooted it our virtual machines suddenly lost connectivity to each other. I got cut off from vCenter and could not connect.

I managed to get onto the host directly via its service console IP and logged into vCenter’s console. vCenter wasn’t going to start because it simply couldn’t contact the SQL server, which was on another server. I couldn’t ping the other server, but I could see that vmnic0 was up and vmnic1 was down. I didn’t start panicking yet and figured I would just remove that virtual machine from inventory and add it onto the same host as the SQL server.

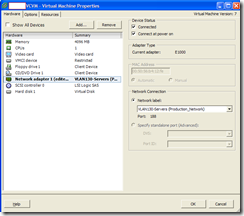

I successfully completed the operation described above, but when I logged into the vCenter server and tried to start the service, it wouldn’t start. Then I noticed that within Windows, the NIC had a red X beside it. Being a Windows guy, I knew the NIC was disconnected, so I went into VI Client to check the check box.

Lo and behold, the checkbox was unchecked. No worries, let’s check it and hit OK. Umm, what’s this?

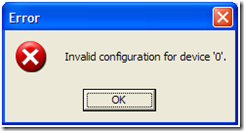

Why now? OK, let me do a Google search. Interesting, a known problem. No worries, I’m a smart guy, I can outsmart this bug:

Umm, I guess I wasn’t that smart:

Note to self and remember this for the future:

<If your vCenter is down, you cannot modify the switch>

Terence: Yes I knew this.

<Yes but did you know you can’t map existing port groups either?>

Terence: No, I did not.

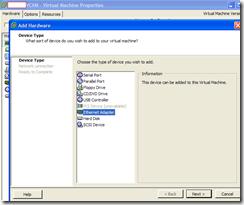

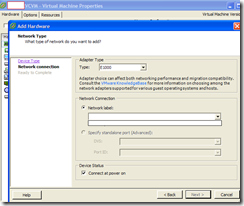

So this was when I started to panic. Dinner plans in 1 hour, what do I do? Why don’t I try to create a new vSwitch?

Yikes, almost forgot this was a UCS with a Menlo card and thus I have 2 vmnics to work with. No problem, I can remove 1 of them right? No, because you lost 1 link!

Now that everything that can possibly go wrong has gone wrong, I’m going to remove my humor and explain what I ended up doing:

Seeing how 1 vmnic was down and I could not edit these dvSwitches from the console, here’s what I did:

1. Create a new vSwitch with no vmnics mapped to it.

2. Remove the physical adapter that’s down from the dvSwitch.

3. Assign that adapter to the new vSwitch.

4. Recreate the port groups you need:

a) virtual machine network

b) service console

OK, so now we have a broken adapter assigned to a regular vSwitch. Just as I was about to ask to reseat the x2 module, I was told that the client wasn’t comfortable with anything being done to the UCS until Cisco determined what the problem was (I got the client to call Cisco to troubleshoot).

I proceeded to do the following (no screenshots from here on as I was in a rush):

5. You cannot edit the dvSwitches from the console, so you have to unmap the leftover active vmnic from the dvSwitch. This will cut you off, but at least you will get the vmnic back, so go ahead and do it.

6. Log into UCSM or the KVM manager and go directly to the console.

7. Log in, use esxcfg-vswif, esxcfg-nics, esxcfg-vswitch to remap the vmnic to the regular vSwitch.

8. What I noticed was that even after doing so, I was unable to connect with VI Client to the service console IP, so I rebooted the server. Once the server was up, I managed to connect with VI Client, modify the NIC settings of the vCenter and SQL virtual machines to put them on the regular vSwitch, and get vCenter back up (vCenter and SQL are on the same host now).
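For reference, the console work in step 7 could look roughly like the sketch below. It is not a record of the exact commands I typed; it only prints a plausible sequence so it can be reviewed before anything is run on a host. The function name `plan_remap` and every value passed to it (vSwitch name, vmnic, port group, IP address, netmask) are placeholders for illustration, and the delete/re-add of `vswif0` is an assumption that only applies if the service console interface is still attached to the dvSwitch.

```shell
#!/bin/sh
# Prints (does NOT execute) a possible command sequence for remapping a freed
# vmnic and the service console interface onto a regular vSwitch.
# All arguments are placeholders; substitute the values for your own host.
plan_remap() {
    vswitch=$1      # regular vSwitch created earlier, e.g. vSwitch1
    vmnic=$2        # physical adapter that was unmapped from the dvSwitch
    portgroup=$3    # service console port group on the regular vSwitch
    ip=$4           # service console IP address
    netmask=$5      # service console netmask

    # Check link state of the physical adapters and the current switch layout.
    echo "esxcfg-nics -l"
    echo "esxcfg-vswitch -l"
    # Link the freed adapter to the regular vSwitch as an uplink.
    echo "esxcfg-vswitch -L $vmnic $vswitch"
    # Assumption: vswif0 still points at the dvSwitch, so recreate it on the
    # regular port group. Skip these two lines if it is already attached there.
    echo "esxcfg-vswif -d vswif0"
    echo "esxcfg-vswif -a vswif0 -p \"$portgroup\" -i $ip -n $netmask"
    # Confirm the result.
    echo "esxcfg-vswif -l"
}
```

Calling `plan_remap vSwitch1 vmnic0 "Service Console" 192.0.2.10 255.255.255.0` prints the six commands in order. Printing rather than executing is deliberate: at this point you are working over the KVM with one good link left, and a typo in an uplink name is not something you want to discover after the fact.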

What an ordeal. This still doesn’t solve the problem of the virtual machines not being able to ping each other across ESX hosts, yet if I console into any of the ESX hosts, the hosts can ping each other (hence no isolation mode, which is why the virtual machines haven’t shut off). Strange. I won’t elaborate on how I solved this problem because I haven’t yet. I went back in the next day, Saturday, and tried removing the dvSwitch and all of the port groups and then recreating the dvSwitch, but still could not establish a connection. Since I had little time on Saturday, I ended up recreating regular vSwitches to get the infrastructure back up. I will try to schedule some time in the following weeks to open a VMware support case to get this resolved and will post back.

Harsh lessons learned:

1. I really hope VMware changes the design of dvSwitches to:

a) allow modifying them through the console and GUI even if vCenter is down.

b) allow you to map dvSwitch port groups even if vCenter is down.

2. Go with a hybrid model and leave the service console on a regular vSwitch in the future (you cannot do this with UCS and a Menlo card).

3. If licensing is not an issue, deploy SQL on the vCenter server.

4. NEVER EVER EVER touch the NIC properties if vCenter is down. See bug mentioned above.

5. Think twice before removing virtual machines from inventory if vCenter is down. See bug mentioned above.

I hope this helps someone in the future if they ever encounter the same problem as I did. I will blog the resolution to the northbound connection to the 3750e switches when we get that sorted out (https://blog.terenceluk.com/problem-with-ucs-northbound-connection/) and the dvSwitch issue when I get that resolved either by myself or through VMware support.

As a final note just in case I’m sending off the vibe that I may not be happy with my experiences with UCS, I’d like to make it clear that I’ve had a blast deploying this infrastructure and would highly recommend this to clients. The issues I encountered here are simply bugs and if we had used another type of x2 extender, we would have never encountered this.

As for vCenter’s new distributed switches, I still like the idea and I’m sure VMware will address the design in the next release.