As per one of my previous posts, I updated a UCS’ firmware from 1.3(1c) to 1.3(1n) (see the post here: https://blog.terenceluk.com/updating-cisco-ucs-firmware-from-131c/) as I needed to use 2 blades from the chassis for a VIEW deployment. Before I begin writing about the problem, let me give a bit of background on how the blades were configured:

- Multiple service profile templates were created.

- Local storage policy was configured as RAID-0 (Striped) because many consultants used this UCS as a lab and SAN storage was not available when we originally put the chassis in.

- No one really manages this environment, so it can be a mess at times (e.g. a blade server not acknowledged after it was moved: https://blog.terenceluk.com/update-status-for-cisco-ucs-blade/).

Our practice lead originally wanted the environment to be cleaned up, but due to a change in priorities, he said the time we have should be spent on getting VIEW 4.5 up for us to test instead. So right after I successfully updated the firmware, I proceeded to configure two blades as ESXi 4.1 hosts for the VIEW environment. I didn’t want to waste time getting access to the NetApp and carving out aggregates, volumes and LUNs, so I decided to start the deployment on the local storage.

Since the local hard disks on the 2 blades held old data that wasn’t important, I decided to reconfigure them as RAID-1 instead of RAID-0. The following lists the steps that I completed:

- Create a new service profile template with RAID-1 as the local storage policy.

- Create 2 service profiles derived from the template created above.

- Associate the service profiles with the 2 blade servers.

Pretty straightforward, right? Not quite: as soon as I assigned the service profiles to the blades, I received the following error:

Affected object: org-root/org-….

Description: Service profile <someName> configuration failed due to destructive-local-disk-config,insufficient-resources

ID: 6166594

Cause: configuration-failure

Code: F0327

Original severity: major

Previous severity: major

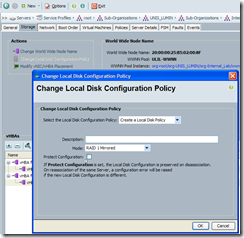
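The fault description above actually carries two reason codes separated by a comma, which is easy to miss when skimming the UCS Manager fault pane. As a rough sketch only (the `failure_reasons` helper is my own hypothetical name, not part of any Cisco tool, and it only assumes the "failed due to" wording seen in the fault above), the reasons can be split out like this:

```python
import re
from typing import List


def failure_reasons(description: str) -> List[str]:
    """Return the comma-separated reason codes that follow 'failed due to'.

    Hypothetical helper: it only assumes the wording of the fault shown in this post.
    """
    match = re.search(r"failed due to\s+(.+)$", description.strip())
    if match is None:
        return []
    # Split on commas and drop any empty fragments.
    return [reason.strip() for reason in match.group(1).split(",") if reason.strip()]


description = (
    "Service profile <someName> configuration failed due to "
    "destructive-local-disk-config,insufficient-resources"
)
print(failure_reasons(description))
# → ['destructive-local-disk-config', 'insufficient-resources']
```

Reading the two codes separately makes it clearer that the local disk configuration change is being rejected as destructive, in addition to the resource complaint.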

After spending a bit of time troubleshooting this error and not getting anywhere, I went ahead and searched the Internet to see if anyone had encountered this problem. While searching, I stumbled upon this blog post: https://www.colinmcnamara.com/fixing-ucs-config-failures-due-to-local-disk-config-requirements/. Colin basically states that the problem is related to the Protect Configuration setting, which protects the disk’s configuration if the service profile associated with the blade is removed:

If Protect Configuration is set, the Local Disk Configuration is preserved on disassociation.

On reassociation of the same Server, a configuration error will be raised if the new Local Disk Configuration is different.

Note: This is not the actual policy applied but I included this screenshot to show the check box.
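To make the quoted rule concrete, here is a toy model of it in Python. This is only my reading of the two sentences above, not how UCS Manager is actually implemented, and the function and parameter names (`association_result`, `protect_configuration`, `preserved_raid`, `requested_raid`) are made up for illustration:

```python
def association_result(protect_configuration: bool,
                       preserved_raid: str,
                       requested_raid: str) -> str:
    """Toy model of the Protect Configuration rule quoted above.

    protect_configuration: whether the check box was set on the old policy.
    preserved_raid: RAID mode left on the blade's disks after disassociation.
    requested_raid: RAID mode asked for by the newly associated service profile.
    """
    # With protection on, a different local disk configuration is refused.
    if protect_configuration and preserved_raid != requested_raid:
        return "configuration-failure"
    return "ok"


# My situation: disks preserved as RAID-0, new profile asks for RAID-1.
print(association_result(True, "RAID-0", "RAID-1"))   # → configuration-failure
# Same RAID mode as before, so nothing destructive is requested.
print(association_result(True, "RAID-0", "RAID-0"))   # → ok
# Protection was never set, so the change is allowed in this model.
print(association_result(False, "RAID-0", "RAID-1"))  # → ok
```

In this model, going from RAID-0 to RAID-1 with protection set is exactly the case that raises the configuration error.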

Colin also states that there was a bug listed in the 1.3 firmware release notes with “no workaround”, and while the Cisco engineer wasn’t able to help him, he was able to get around it with the following steps:

- Create a new local disk policy with the proper RAID.

- Apply the old policy (the one with the configuration he didn’t want) to the blade.

- Apply the new policy with the configuration he wanted.

I went ahead and gave this a shot only to continually have it fail at the same point.

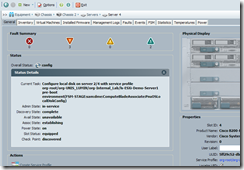

[FSM:STAGE:RETRY:]: Configure local disk on server 2/4 with service profile …… pre-boot environment(FSM-STAGE:sam:dme:ComputeBladeAssociate:PnuOSLocalDiskConfig)

Description: [FSM:STAGE:REMOTE-ERROR]: Result: service-unavailable Code: unspecified

Message: Error Configuring Local Disk

Controller(sam:dme:ComputeBladeAssociate:PnuOSLocalDiskConfig)

ID: 6166812

Cause: pnuoslocal-disk-config-failed

Code: F77961

Original severity: warning

Previous severity: cleared

What I sort of suspected was that the original policy for the local disk configuration had been deleted some time ago, so no matter which policy I set, this workaround didn’t work. After trying every combination I could think of by disassociating and reassociating the service profiles (this routine takes a long time), I gave up on Colin’s workaround and decided to try something else.

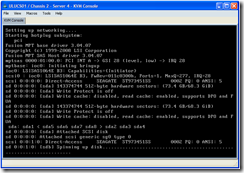

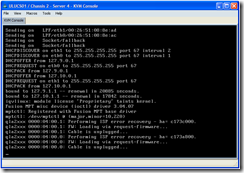

The something else I had in mind was to simply pull out the 2 local hard disks and plug each one back into the other disk’s slot. By this I mean the disk in slot 1 goes into slot 2 and vice versa. The content on the drives did not matter to me since they were ESXi installs that I was going to blow away anyway, so this seemed like the logical step to try. Here are the steps that finally got it to work:

1. Disassociate service profile from blade.

2. Re-acknowledge the blade (tried to skip this step for the 2nd blade and that didn’t work).

3. Associate new service profile with new local disk policy to blade.

The steps above appeared to do the trick and now I have 2 blades that are configured with RAID-1 as their local disk policy. Since I’m in a rush to move on to the VIEW 4.5 deployment, I will most likely have to revisit this in a few weeks to determine if there are any other workarounds.
