To continue from my previous post:

Configuring a Citrix ADC / NetScaler to provide AD FS (Active Directory Federation Services) WAP (Web Application Proxy) service

https://blog.terenceluk.com/configuring-citrix-adc-netscaler-to/

I had completed the configuration of a virtual server that directed external inbound AD FS traffic via SSL_Bridge to the Windows Server 2019 AD FS WAP server, and a content switching server that responded to external inbound AD FS traffic via SSL (performing the functions of a Windows Server 2019 AD FS WAP server). The next step was to load balance the two. The intention was to have the Windows Server 2019 WAP server provide AD FS sign-in services and only fail over to the Citrix ADC WAP in the event of a failure, but there did not seem to be a way to configure a Content Switching Virtual Server as a backup server to a Load Balancing Virtual Server. Another challenge was that the AD FS health probe required port 80 to be opened for the Azure Traffic Manager to monitor the health of the AD FS service. Allowing the Traffic Manager to monitor the health of the AD FS servers from the WAP through to the internal farm has been a topic of discussion in other blog posts, but for this configuration the Citrix ADC already monitors the AD FS servers, so all I really needed was to allow the Azure Traffic Manager to monitor the WAP services presented by the Citrix ADC.

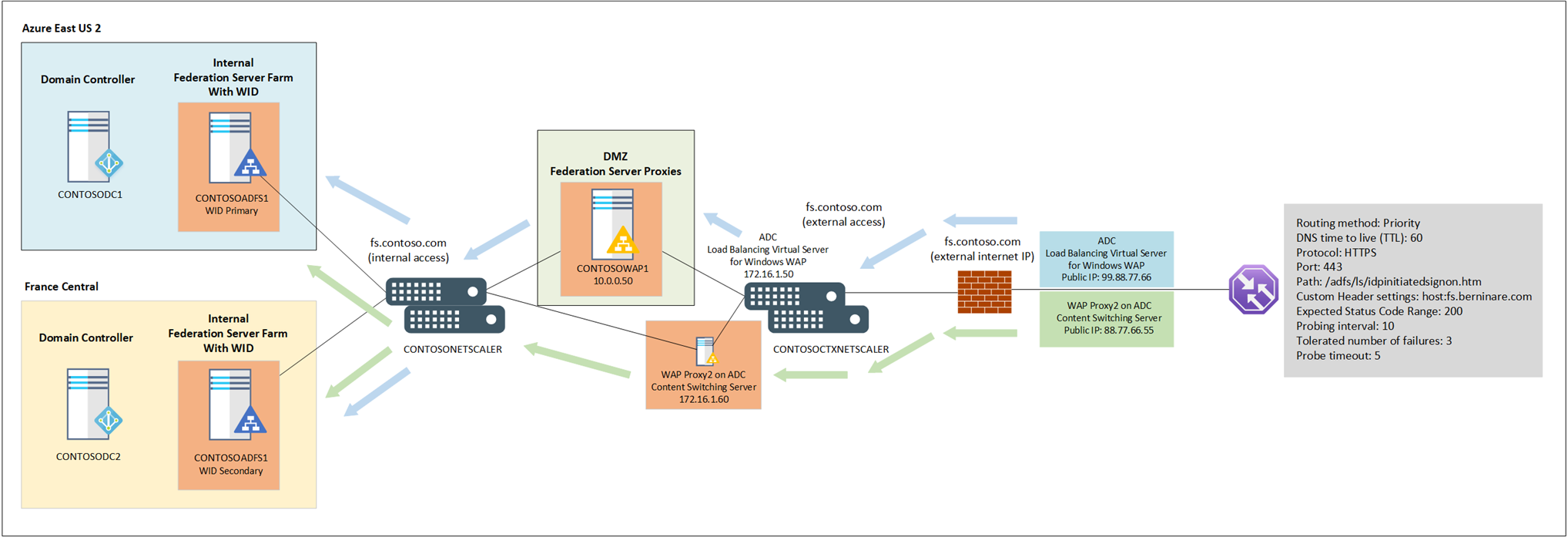

With the above state, the following is what the topology looks like:

Note the following design features:

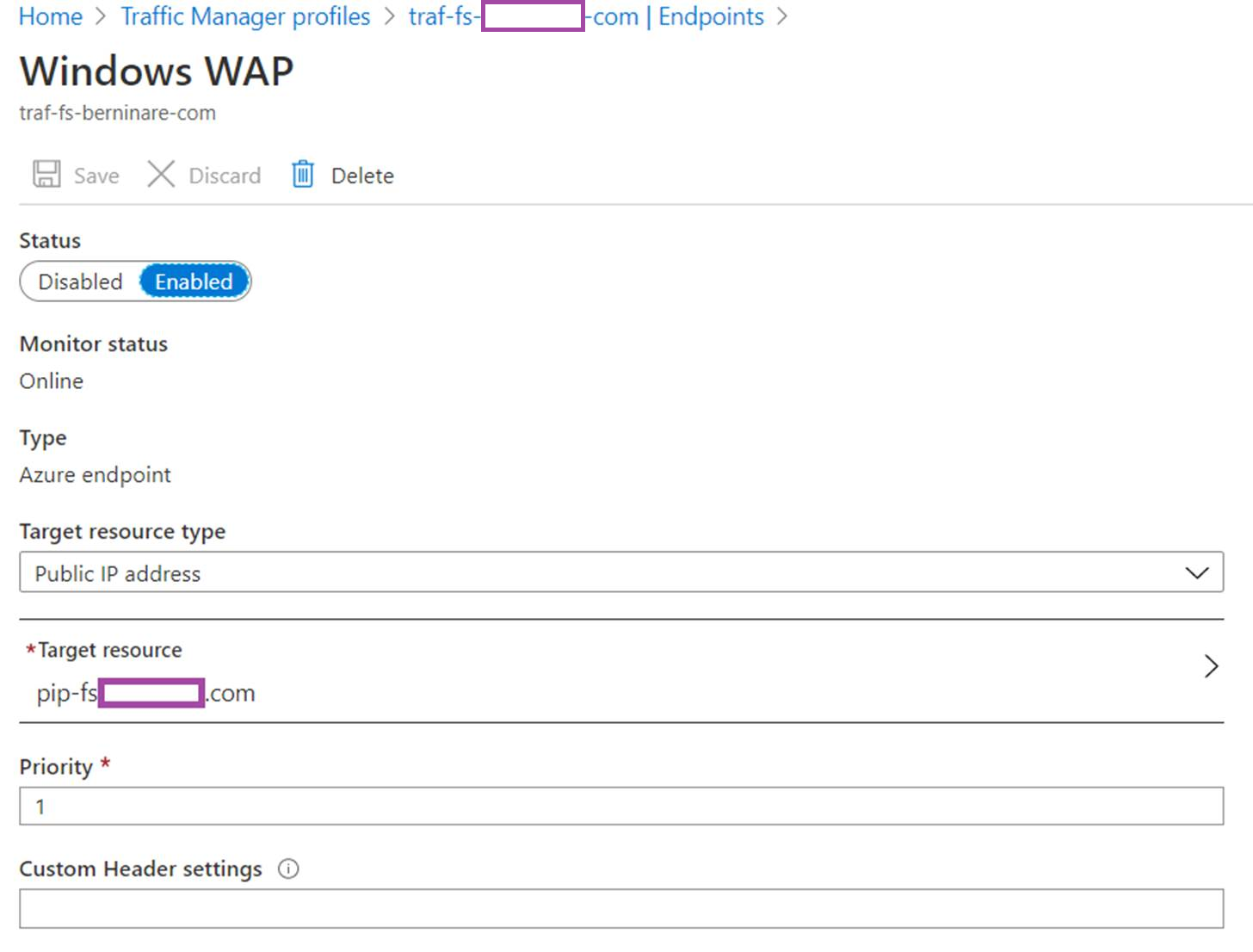

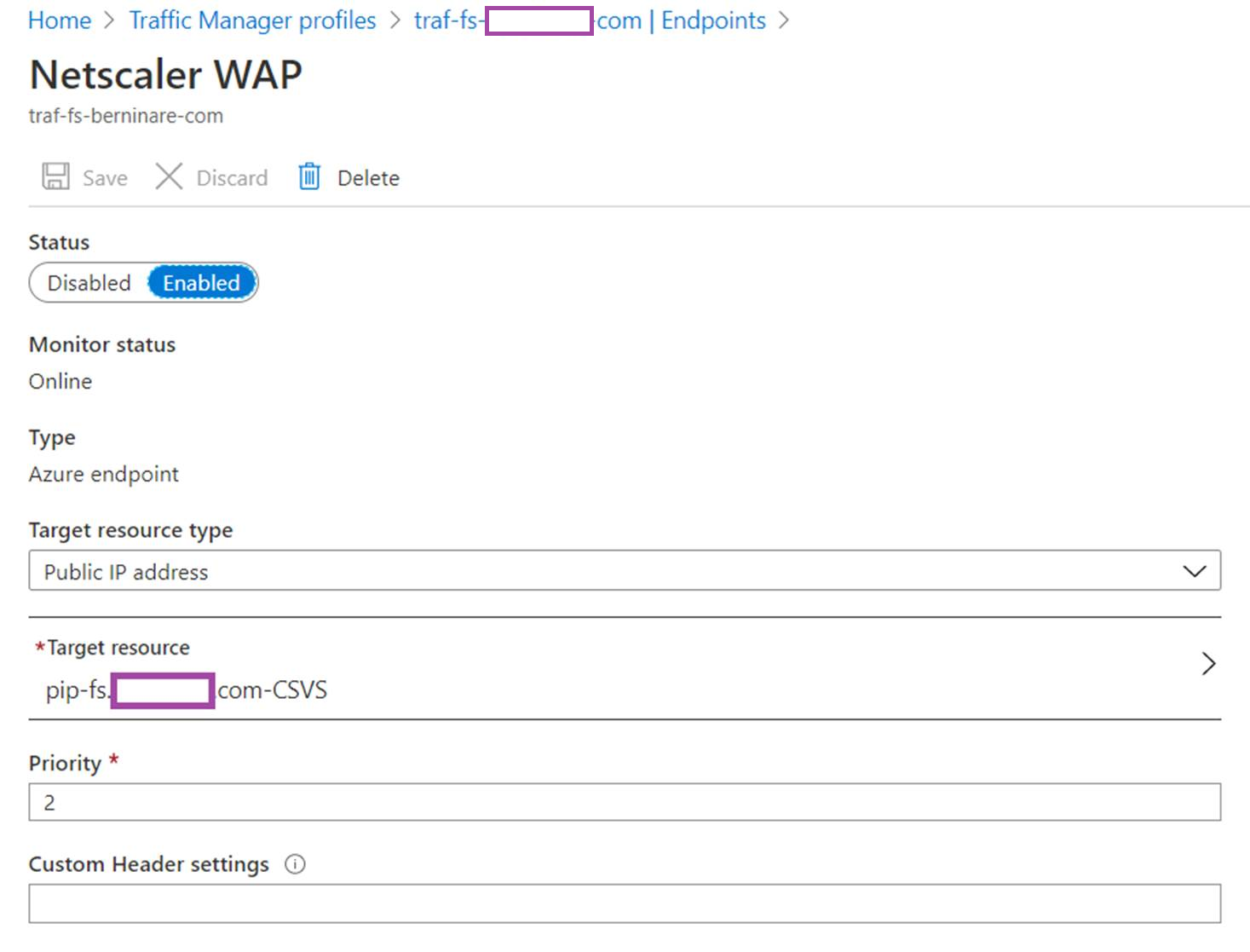

- There are two public IP addresses: one represents the Windows Server 2019 AD FS WAP and the other represents the Content Switching Server WAP on the Citrix ADC.

- The Azure Traffic Manager has the routing method configured as priority, which directs all traffic to the Windows Server 2019 WAP and only to the Content Switching Server WAP on the Citrix ADC when the Windows Server 2019 WAP is unavailable.

- Health probes are configured on the Citrix ADC to monitor the Windows AD FS servers’ health via port 80 /adfs/probe.

- The idpinitiatedsignon.htm page is enabled in the AD FS farm so it is publicly accessible via

- The Azure Traffic Manager will deem the AD FS portal as being unavailable if the idpinitiatedsignon.htm page is not reachable.
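To make the priority behavior described in the list above concrete, here is a minimal Python sketch of the selection logic. It is only an illustration of the idea, not how Traffic Manager is implemented; the endpoint names `windows-wap` and `citrix-adc-wap` and the `Endpoint` type are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Endpoint:
    name: str       # hypothetical label, not taken from the real profile
    priority: int   # lower number = preferred endpoint
    healthy: bool   # result of the most recent health probe


def pick_endpoint(endpoints: List[Endpoint]) -> Optional[Endpoint]:
    """Return the healthy endpoint with the lowest priority number.

    If nothing is healthy this sketch returns None; what the real
    service does in that situation is not modeled here.
    """
    healthy = [e for e in endpoints if e.healthy]
    return min(healthy, key=lambda e: e.priority) if healthy else None


endpoints = [
    Endpoint("windows-wap", priority=1, healthy=True),
    Endpoint("citrix-adc-wap", priority=2, healthy=True),
]

# Both endpoints healthy: the Windows Server 2019 WAP is chosen.
print(pick_endpoint(endpoints).name)

# The Windows WAP fails its probe: traffic moves to the Citrix ADC WAP.
endpoints[0].healthy = False
print(pick_endpoint(endpoints).name)
```

The Citrix ADC WAP only ever receives traffic in this model when the priority 1 endpoint is marked unhealthy, which is the failover behavior I was after.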

Configuration of the Azure Traffic Manager

The following screenshots are the configuration parameters used for the Azure Traffic Manager:

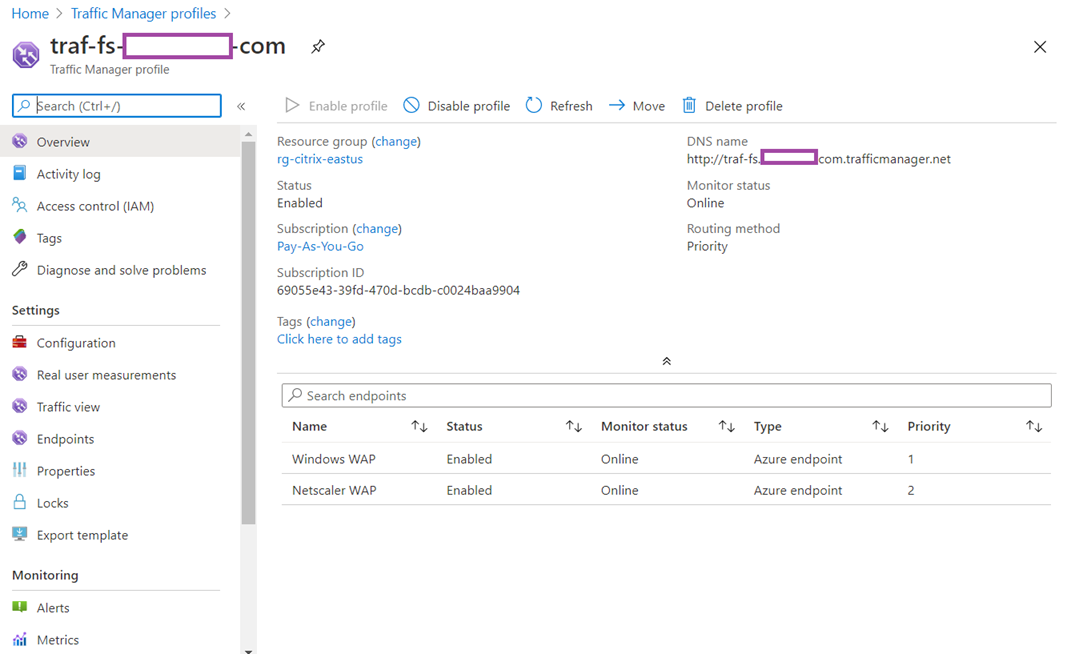

Overview:

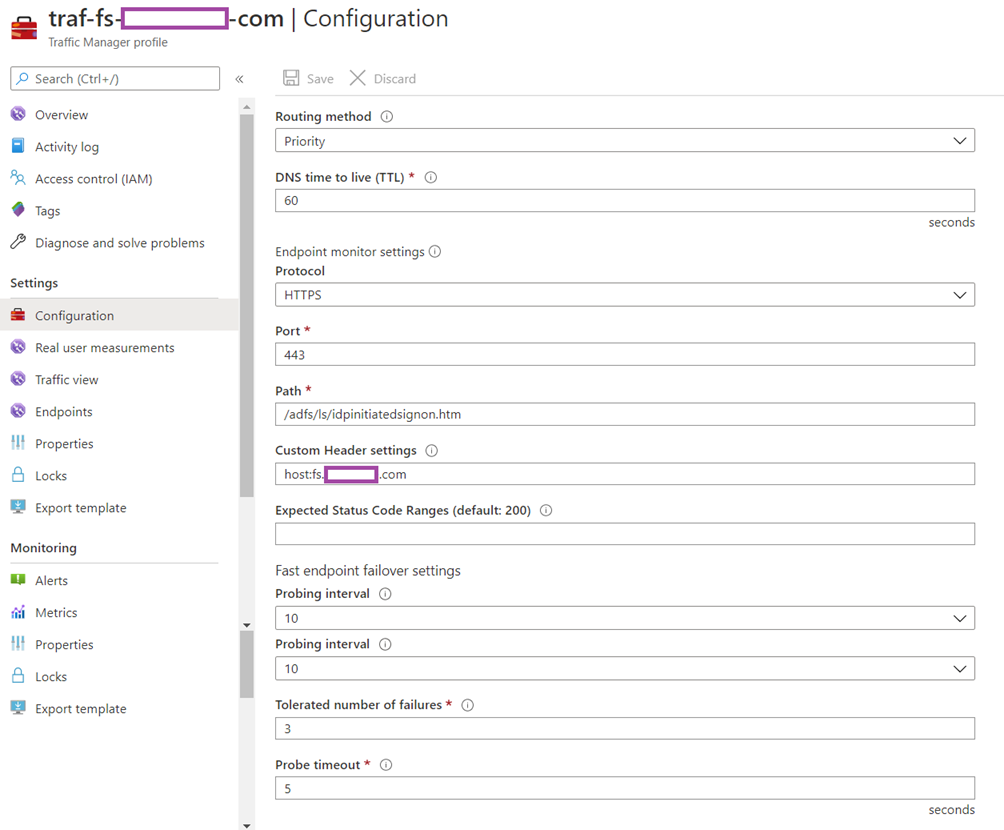

Configuration:

Routing method: Priority

DNS time to live (TTL): 60

Protocol: HTTPS

Port: 443

Path: /adfs/ls/idpinitiatedsignon.htm

Custom header settings: host:fs.berninare.com

Expected Status Code Ranges (default: 200): <blank> defaults to 200

Probing interval: 10

Tolerated number of failures: 3

Probe timeout: 5
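If you want to check by hand what a probe with these settings would see, the following Python sketch sends a similar request using only the standard library. It is an approximation for troubleshooting, not the Traffic Manager probing agent itself; the function names and the `verify_tls` switch are my own assumptions, while the path, host header, port, timeout and expected status are the values listed above.

```python
import http.client
import ssl
from typing import List, Tuple

# Values copied from the profile settings listed above.
PROBE_PATH = "/adfs/ls/idpinitiatedsignon.htm"
HOST_HEADER = "fs.berninare.com"
PROBE_PORT = 443
PROBE_TIMEOUT_SECONDS = 5
EXPECTED_STATUS_RANGES: List[Tuple[int, int]] = [(200, 200)]


def status_is_expected(status: int, ranges: List[Tuple[int, int]]) -> bool:
    """True if the HTTP status falls inside any inclusive (low, high) range."""
    return any(low <= status <= high for low, high in ranges)


def probe(endpoint_address: str, verify_tls: bool = True) -> bool:
    """Send one HTTPS GET to the endpoint and report whether it looks healthy."""
    context = ssl.create_default_context()
    if not verify_tls:
        # Assumption: only useful when testing against a bare public IP,
        # where the certificate name cannot match the address.
        context.check_hostname = False
        context.verify_mode = ssl.CERT_NONE
    conn = http.client.HTTPSConnection(
        endpoint_address, PROBE_PORT,
        timeout=PROBE_TIMEOUT_SECONDS, context=context,
    )
    try:
        # Supplying Host explicitly mirrors the custom header setting.
        conn.request("GET", PROBE_PATH, headers={"Host": HOST_HEADER})
        return status_is_expected(conn.getresponse().status, EXPECTED_STATUS_RANGES)
    except (OSError, http.client.HTTPException):
        # Timeouts, refused connections and TLS errors all count as a failure.
        return False
    finally:
        conn.close()
```

Calling `probe()` with the public IP address of either WAP endpoint (and `verify_tls=False` when using a bare IP) should return `True` while the idpinitiatedsignon.htm page is reachable through that endpoint.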

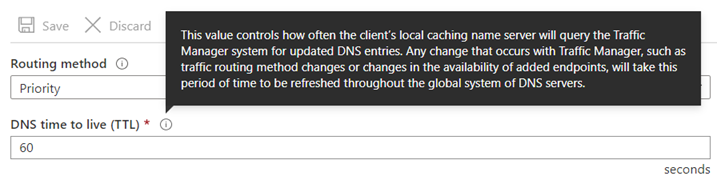

Most of the configuration parameters are self-explanatory but the one I want to note is the DNS time to live (TTL) which has the following description:

A more in-depth explanation can be found here: https://docs.microsoft.com/en-us/azure/traffic-manager/traffic-manager-faqs#what-is-dns-ttl-and-how-does-it-impact-my-users

What is DNS TTL and how does it impact my users?

When a DNS query lands on Traffic Manager, it sets a value in the response called time-to-live (TTL). This value, whose unit is in seconds, indicates to DNS resolvers downstream on how long to cache this response. While DNS resolvers are not guaranteed to cache this result, caching it enables them to respond to any subsequent queries off the cache instead of going to Traffic Manager DNS servers. This impacts the responses as follows:

- a higher TTL reduces the number of queries that land on the Traffic Manager DNS servers, which can reduce the cost for a customer since number of queries served is a billable usage.

- a higher TTL can potentially reduce the time it takes to do a DNS lookup.

- a higher TTL also means that your data does not reflect the latest health information that Traffic Manager has obtained through its probing agents.

As described above, a higher TTL value reduces cost and DNS lookup time, but at the expense of the results not reflecting the current health of the AD FS WAP server. I’ve found that a value of 60 seconds gives an acceptable failover time to the secondary Citrix ADC WAP, but please be cognizant of the increase in cost if this is a heavily accessed service.
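For a rough feel of how the TTL combines with the probe settings, the sketch below adds them up in Python. The formula is my own back-of-envelope assumption (an endpoint is marked down after one more consecutive failure than the tolerated number, the last failure takes up to the probe timeout to register, and clients may hold the old DNS answer for a full TTL), so treat the result as an approximate upper bound rather than a documented guarantee.

```python
def estimate_failover_seconds(ttl: int, probing_interval: int,
                              tolerated_failures: int, probe_timeout: int) -> int:
    """Rough worst-case time before clients reach the secondary endpoint.

    Assumption: detection takes (tolerated_failures + 1) probing intervals
    plus one probe timeout, and cached DNS answers live for one full TTL.
    """
    detection = (tolerated_failures + 1) * probing_interval + probe_timeout
    return detection + ttl


# Settings used in this post: TTL 60, interval 10, tolerated failures 3, timeout 5.
print(estimate_failover_seconds(ttl=60, probing_interval=10,
                                tolerated_failures=3, probe_timeout=5))  # 105
```

Under these assumptions the TTL is the largest single term, which is why lowering it shortens failover at the price of more billable DNS queries.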

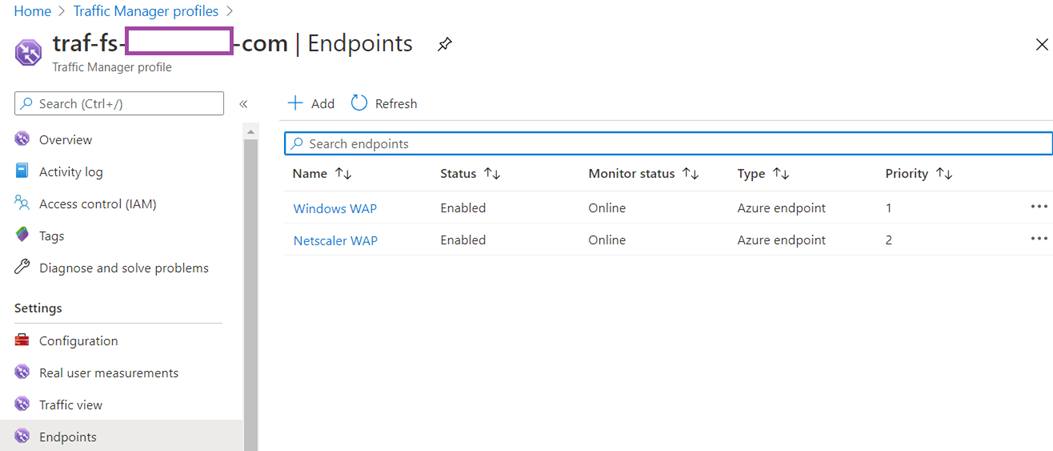

Endpoints:

Hope this helps anyone who may be looking to configure their AD FS external access similar to what I’ve put in place. I am always open to suggestions and corrections if there are any issues with this configuration.