As a follow up to one of my previous posts:

Using a Logic App to automate the copying of files that are uploaded to a Storage Account to SharePoint Online

One of the asks I’ve received for this setup in the past was whether there was a way to monitor the inbound files to the Azure Storage account and send out alerts or reports at a specified window. An example of a specific business use case could be:

Scenario

- Let’s say this Azure Storage Account is configured as an SFTP service where a partner of a company uploads files each month.

- The Logic App in my previous post will then copy the files to SharePoint and alert the business users of inbound files.

- Each of the various folders are expected to receive files on a monthly basis at the beginning of the month.

- The uploaded files are then used for a business process at the a specific amount of days after the start of the month.

Problem

- The organization has noticed that there are times when the partner has not uploaded the files within the expected window at the beginning of the month.

- If the business process is executed on the 3rd day of the beginning of the month and the file is not uploaded, the team would scramble to ask the partner for the missing file(s) on the 4th day.

Solution

- The ask from the organization is whether there is a way to set up monitoring on the folders that will send out an alert if no files are uploaded.

- As an example, if a folder is expected to receive a file within the first 3 days of the month, an alert will be sent to the team every day on each of the first 3 days of the month to indicate no files have been uploaded.

- The automation should run on the 1st, 2nd, 3rd, and 4th (the additional day is to check for the last 3rd day) of each month.

There are various ways to monitor and alert based on these requirements and example I will provide in this post is with the use of a Log Analytics and Logic App. The Log Analytics will log all blob diagnostic events performed on the storage account, while the Logic App will query these logs on a daily recurring schedule the determine whether files have been uploaded.

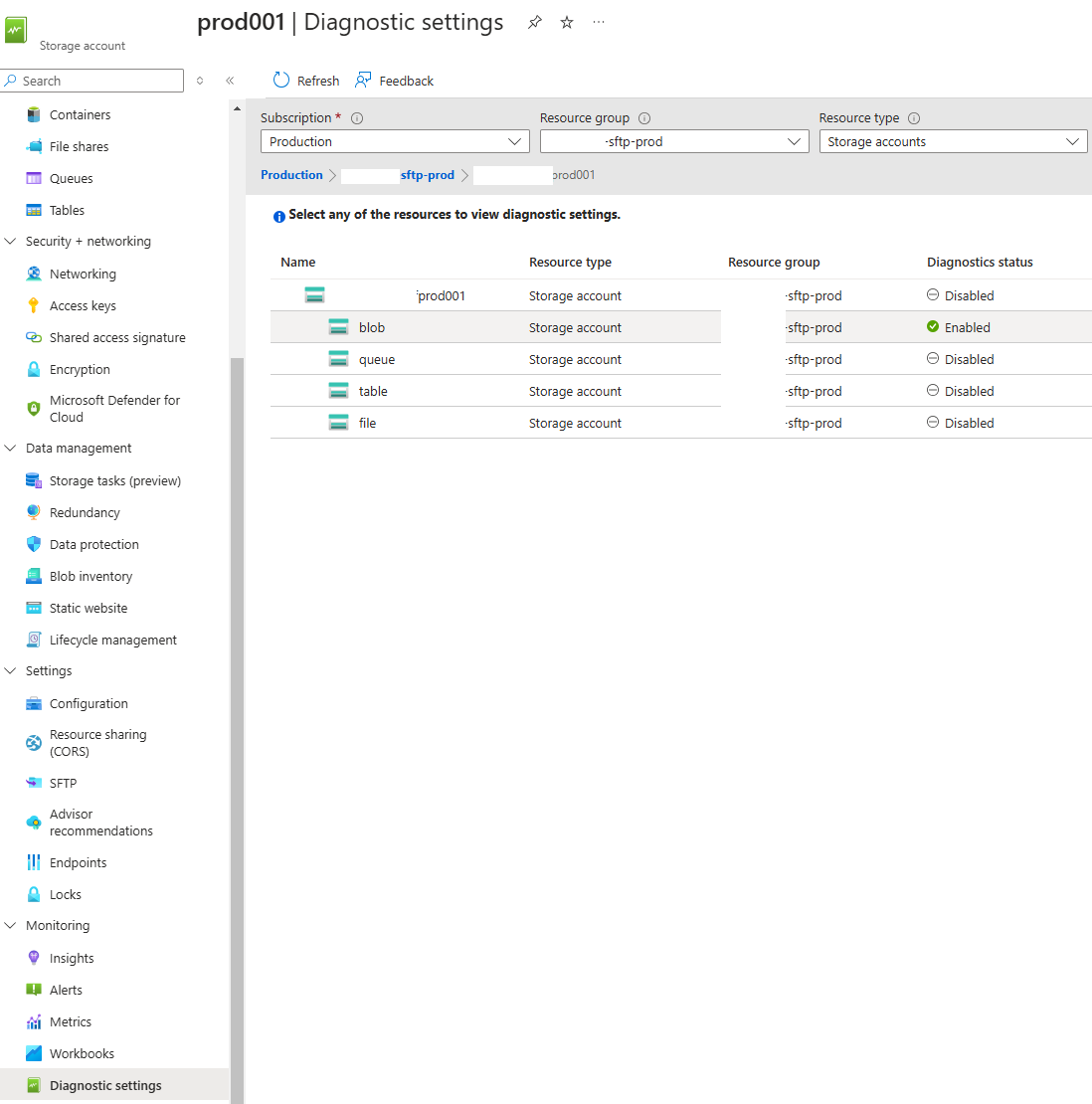

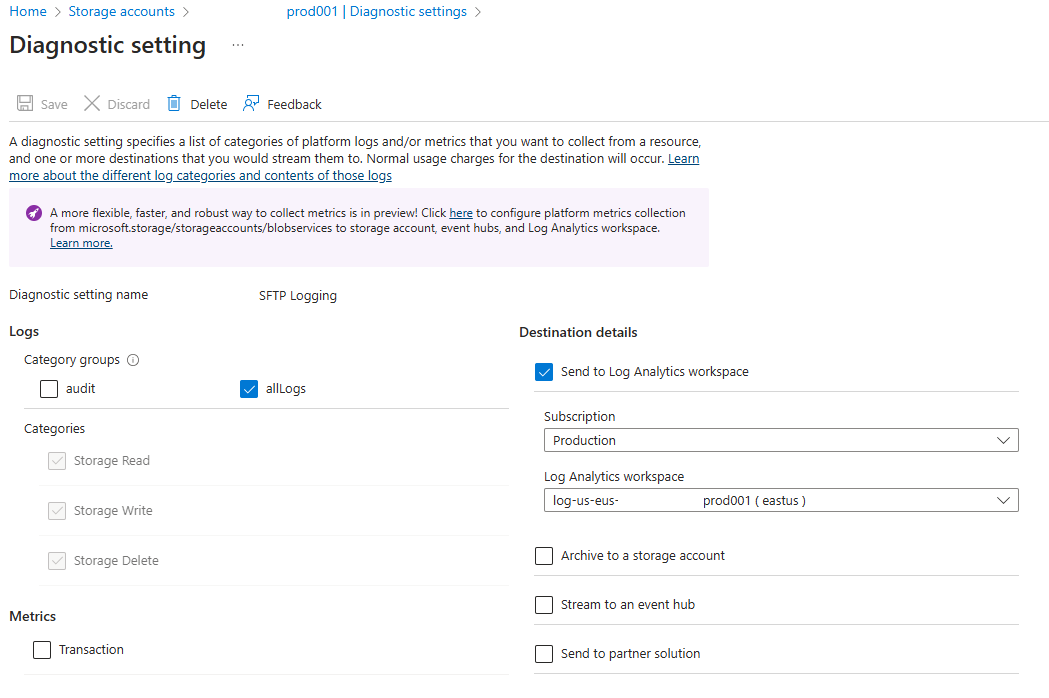

Step #1 – Set up Diagnostics Settings on Storage Account

The first step is to ensure that Diagnostic Settings is configured for the storage account:

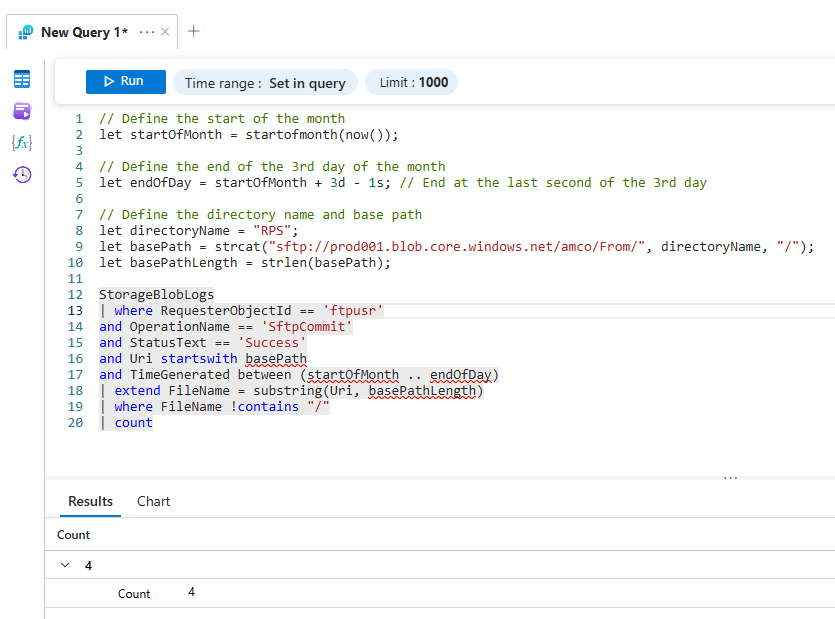

Step #2 – Create Log Analytics Workspace KQL Query for results

Next, we’ll need to build a KQL query to query the Log Analytics workspace logs to determine whether files have been uploaded to the folder during this month (not any of the months before). Before building out the query, let’s state the container and folder structure for the Storage Account as well as the expected days the files should be uploaded.

Container Name: amco

Folder Structure: From/RPS

Expected Inbound Files Schedule: Files should be uploaded via SFTP on the 1st, 2nd, and 3rd day at the start of the month.

With the above defined, the KQL query we’ll be using is the following:

// Define the start of the month

let startOfMonth = startofmonth(now());

// Define the end of the 3rd day of the month

let endOfDay = startOfMonth + 3d – 1s; // End at the last second of the 3rd day

// Define the directory name and base path

let directoryName = “RPS”;

let basePath = strcat(“sftp://prod001.blob.core.windows.net/amco/From/”, directoryName, “/”);

let basePathLength = strlen(basePath);

StorageBlobLogs

| where RequesterObjectId == ‘ftpusr’

and OperationName == ‘SftpCommit’

and StatusText == ‘Success’

and Uri startswith basePath

and TimeGenerated between (startOfMonth .. endOfDay)

| extend FileName = substring(Uri, basePathLength)

| where FileName !contains “/”

| count

The KQL query above will query the Log Analytics workspace to determine whether any files were uploaded within the first 3 days of the month and output the amount of files uploaded (handled by the | count at the end of the query). The parameters can be adjusted to the directory, base path, and the amount of day variables as required for any other environment with a different folder structure.

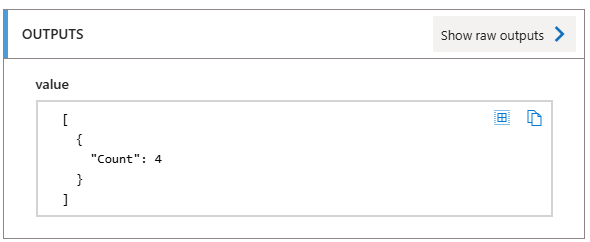

Here is a sample of what the query would return:

The | count can be removed for a more verbose output of the specific files.

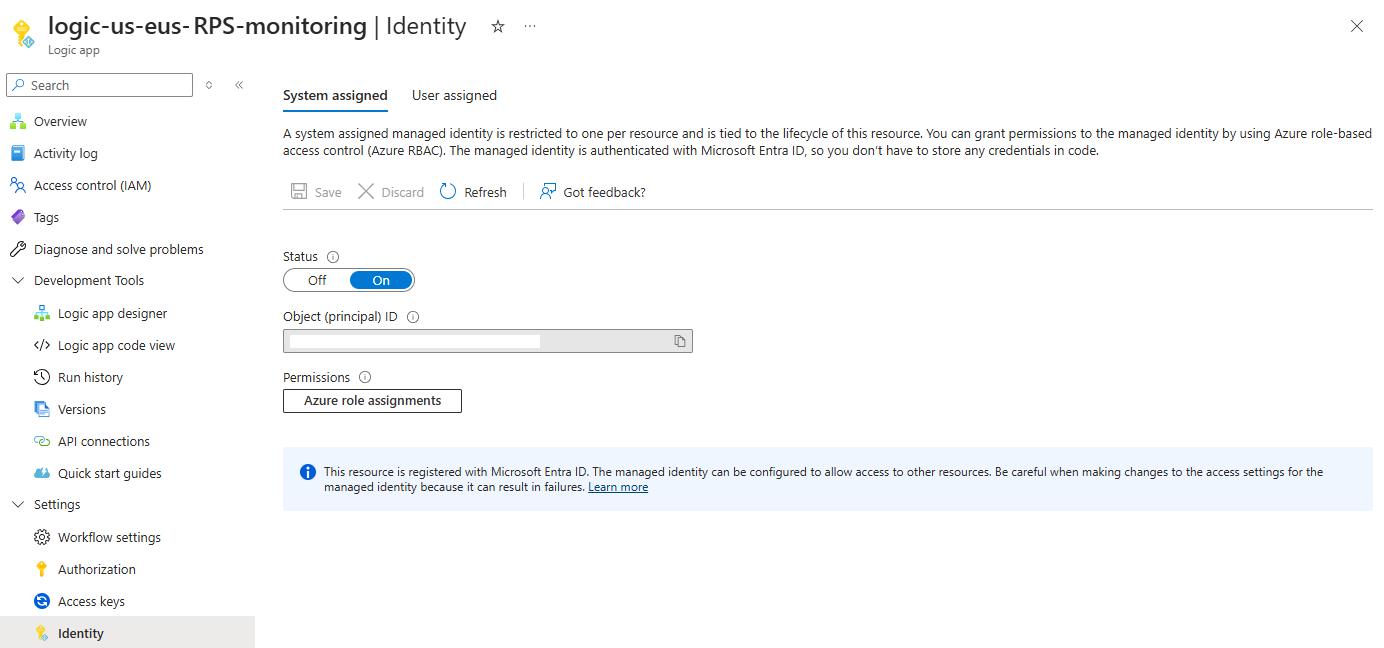

Step #3 – Set up Logic App System Managed Identity for Monitoring the folder

Proceed to create a new Logic App, turn on the System assigned managed identity:

Grant the Logic App‘s system managed identity the Log Analytics Reader role for the Log Analytics Workspace:

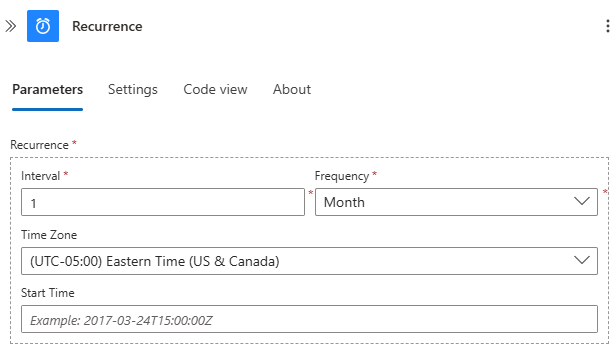

Step #4 – Set up the Recurrence schedule trigger for the Logic App

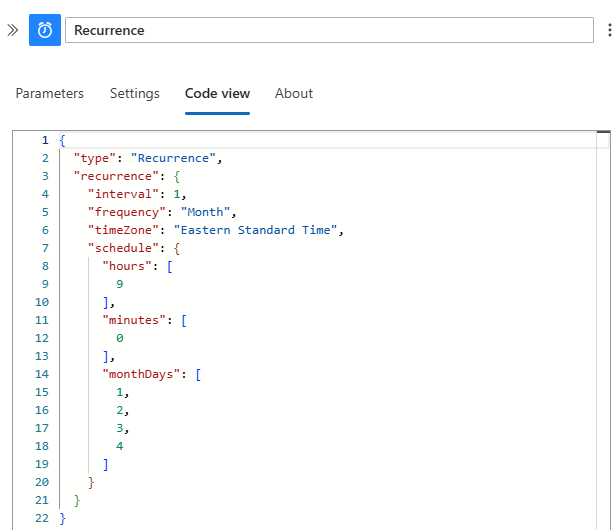

The flow of the Logic App‘s actions will look as such:

There isn’t a way to configure the recurrence to run the Logic App on the first 4 days of the month via the graphical user interface of the Logic App designer so we’ll be using the JSON definition as described in the following Microsoft documentation:

Note that the example in the documentation demonstrates how to schedule the run on the last day of the month. The JSON for this example has been updated to run on the first 4 days of the month at 9AM EST.

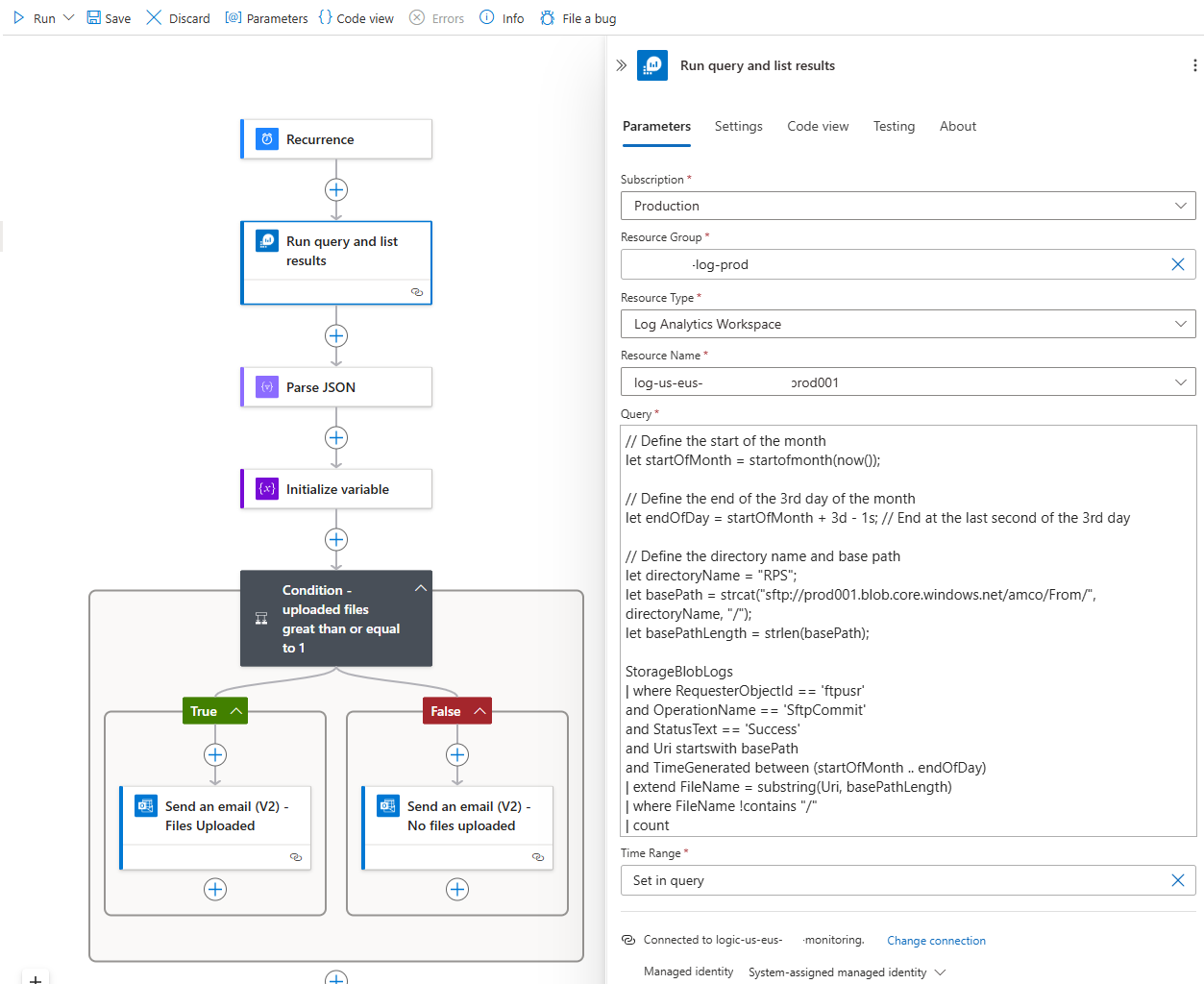

Step #5 – Run a KQL query against the Log Analytics workspace to determine whether files were uploaded

We’ll now add the action Run query and list results to query the Log Analytics workspace for the amount of files uploaded to the specified folder:

// Define the start of the month

let startOfMonth = startofmonth(now());

// Define the end of the 3rd day of the month

let endOfDay = startOfMonth + 3d – 1s; // End at the last second of the 3rd day

// Define the directory name and base path

let directoryName = “RPS”;

let basePath = strcat(“sftp://prod001.blob.core.windows.net/amco/From/”, directoryName, “/”);

let basePathLength = strlen(basePath);

StorageBlobLogs

| where RequesterObjectId == ‘ftpusr’

and OperationName == ‘SftpCommit’

and StatusText == ‘Success’

and Uri startswith basePath

and TimeGenerated between (startOfMonth .. endOfDay)

| extend FileName = substring(Uri, basePathLength)

| where FileName !contains “/”

| count

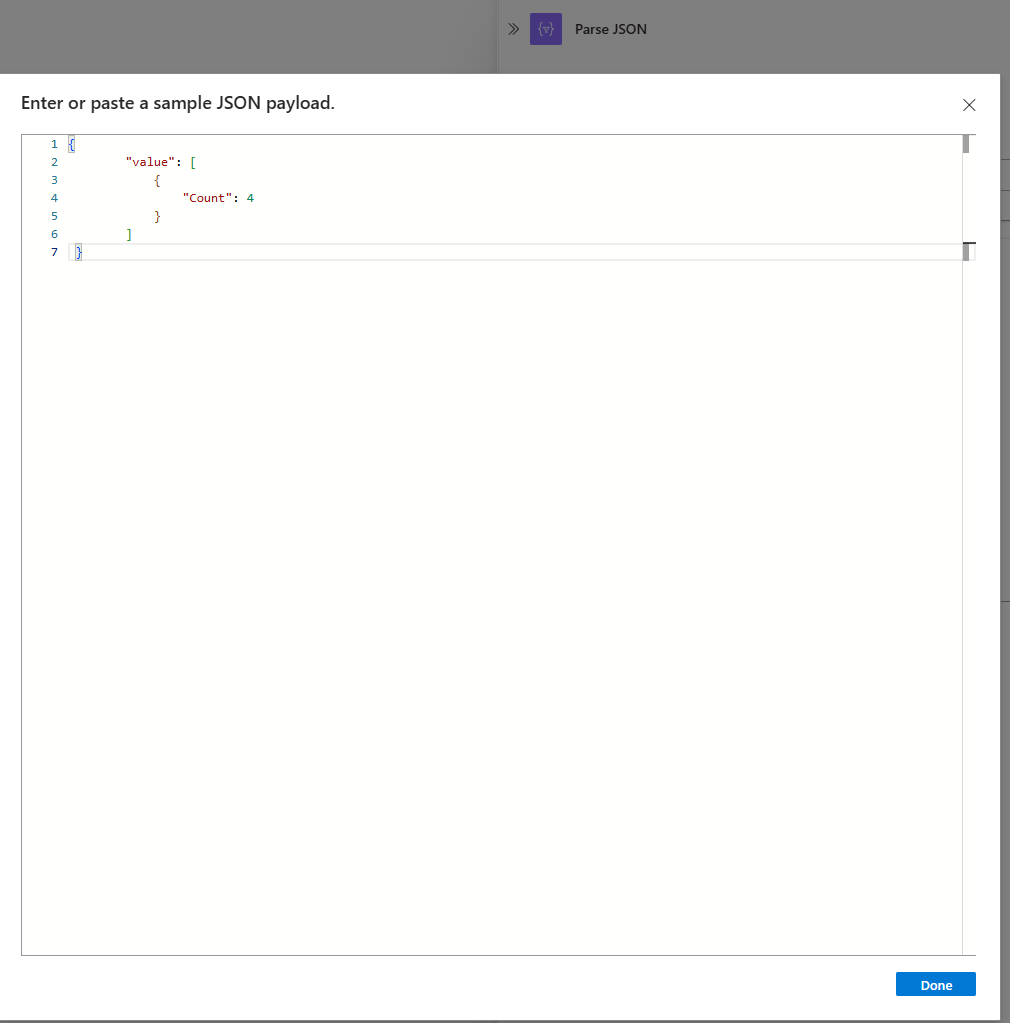

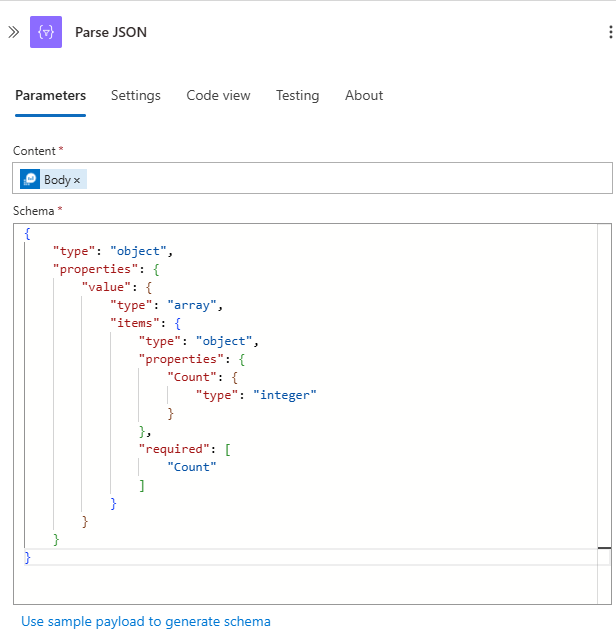

Step #6 – Extract number of files from KQL query output’s count key/value pair

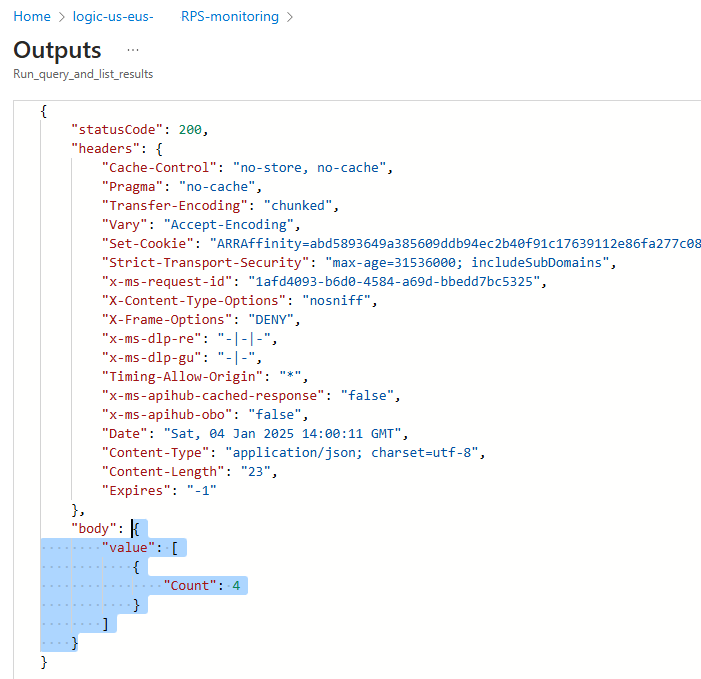

The output of the Run query and list results will be a JSON format and the value we’re interested in is the Count key/value pair:

Step #7 – Initialize variable to store number of files uploaded (count)

With the results from the Run query and list results action parsed, we can now initialize and store the amount of files returned from the query with the following function:

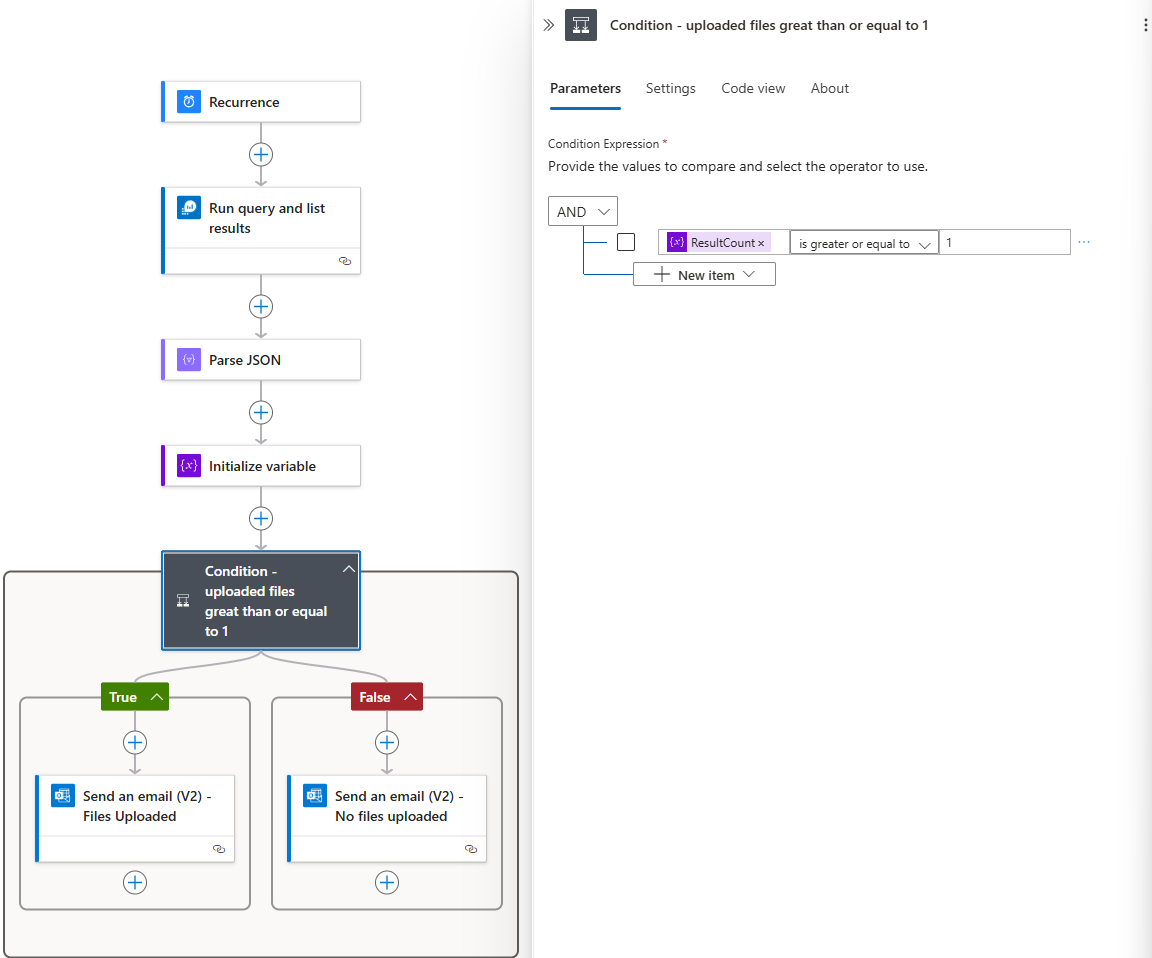

Step #8 – Determine whether files have been uploaded or not

Proceed to use a condition action to determine whether the count of files is greater or equal to 1, so we can send notifications out accordingly:

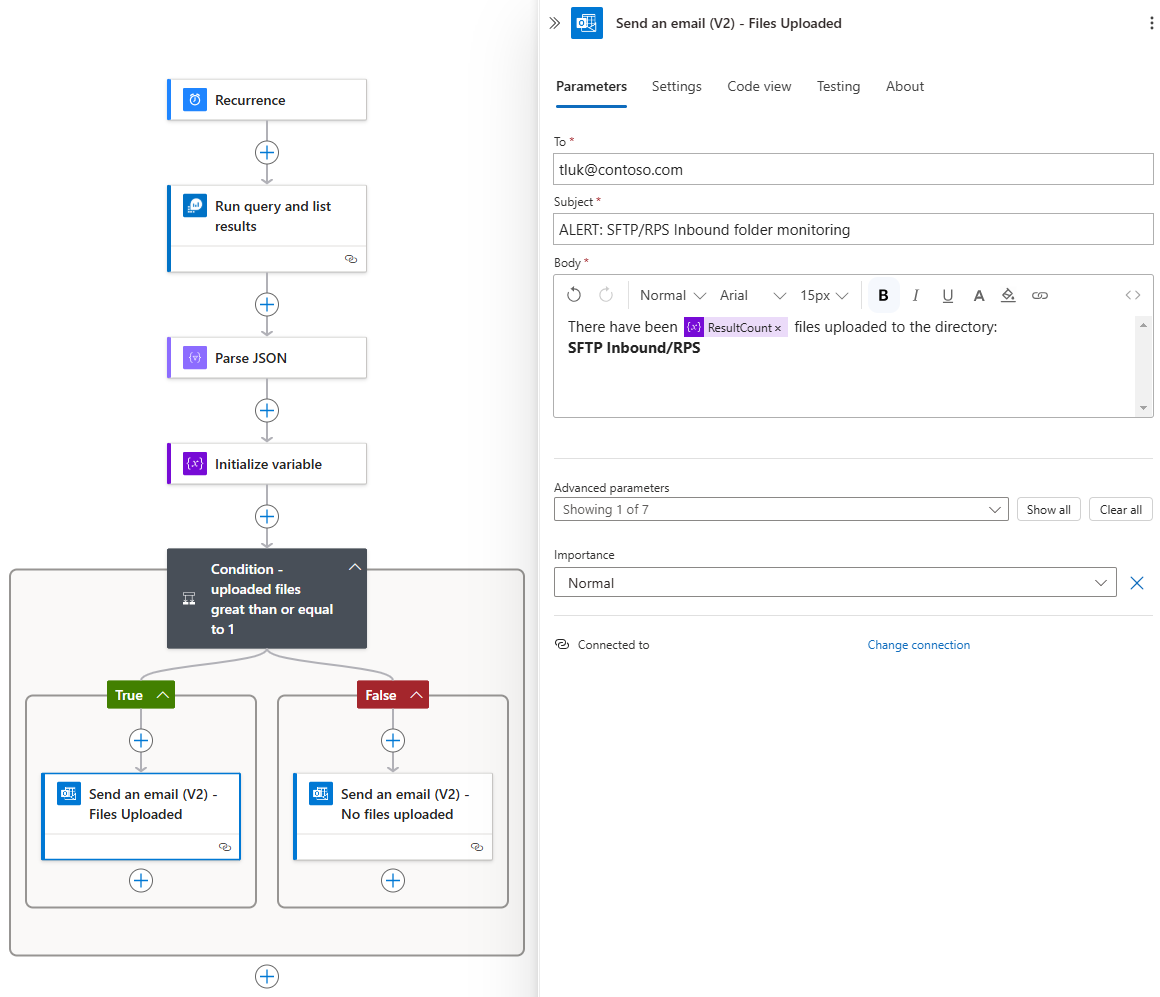

Step #9 – Use email to notify whether files have been uploaded

The last step is to set up the notification emails for either:

- Files have been uploaded

- No files were uploaded

Here is an example of the notification email for files have been uploaded:

Hope this helps anyone who may be looking for a way to monitor Azure Storage Account folders.