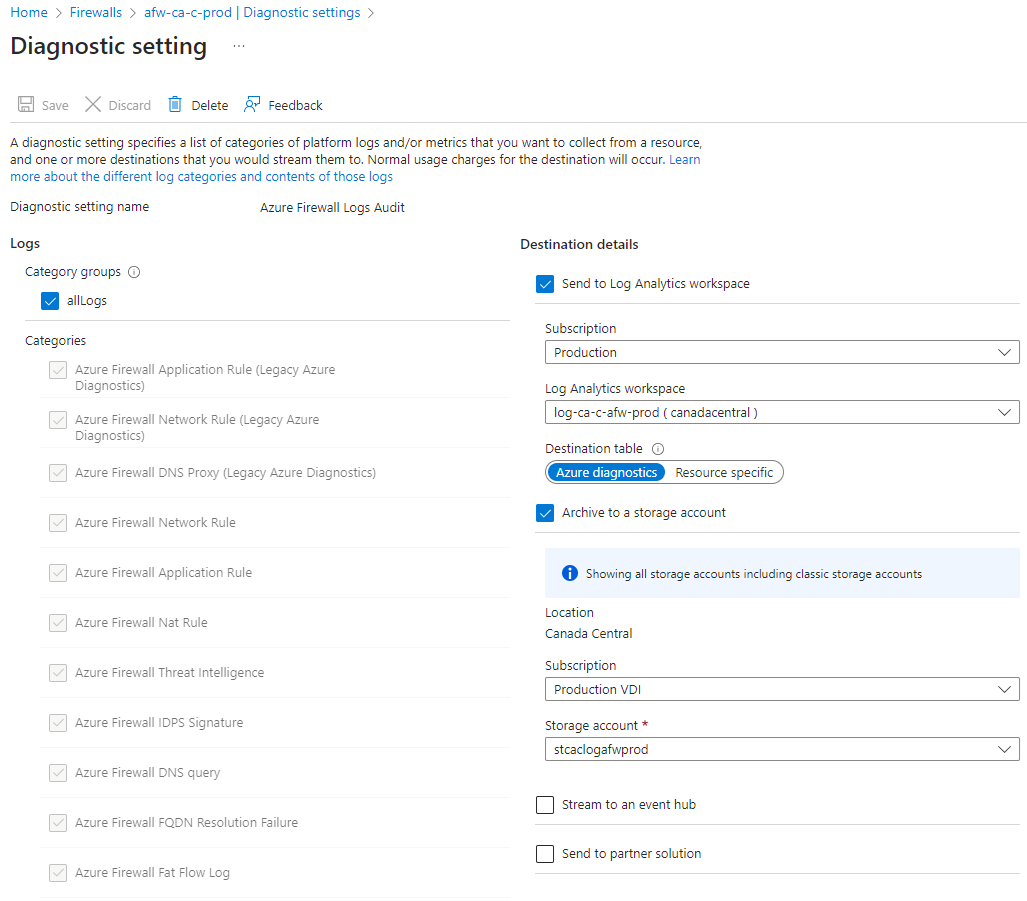

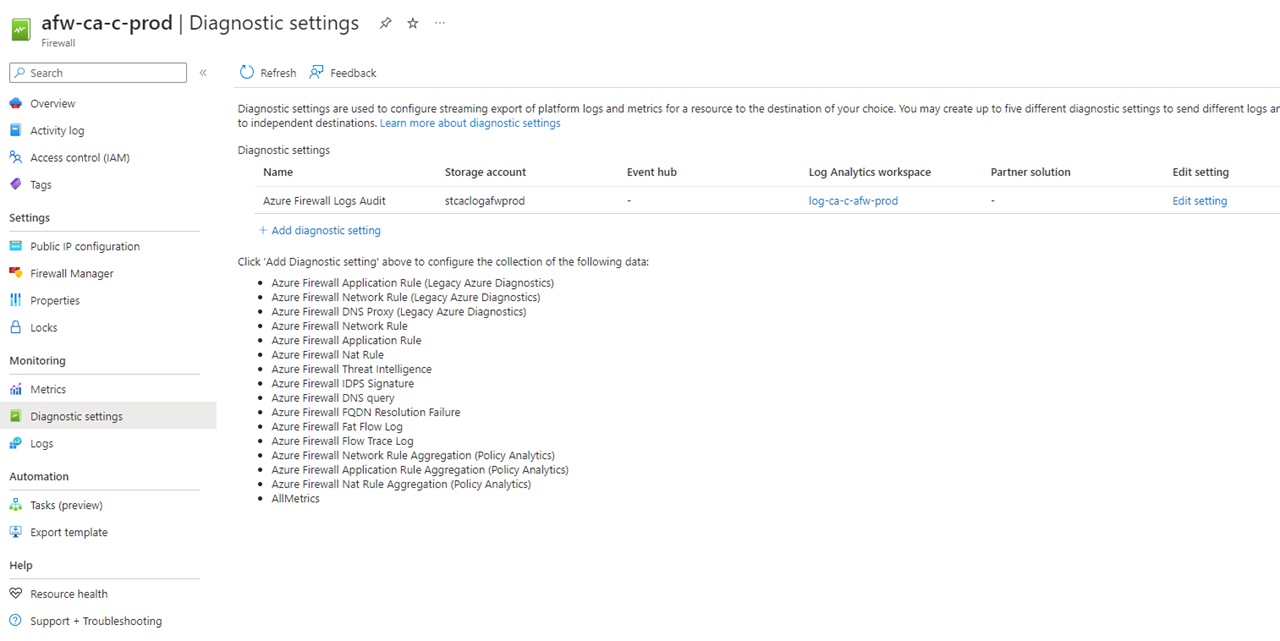

One of the clients I recently worked with had a requirement that all traffic traversing through the Azure Firewall need to be stored for at least 6 months due to auditing requirements. Accomplishing this wasn’t difficult because it was a matter of either increasing the retention for the Log Analytics Workspace or sending the log files to a storage account for archiving. Given the long period of 6 months, I opted to set the Log Analytics workspace retention to 3 months and provide the remaining retention by sending the logs to a storage account:

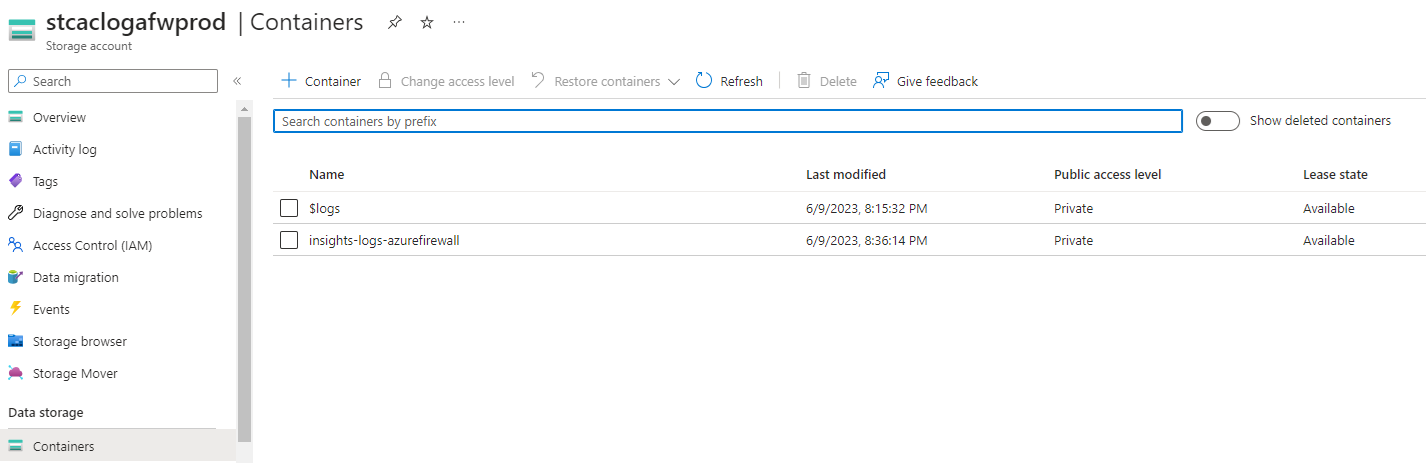

The Firewall logs that are sent to the storage account will be stored in a container named insights-logs-azurefirewall:

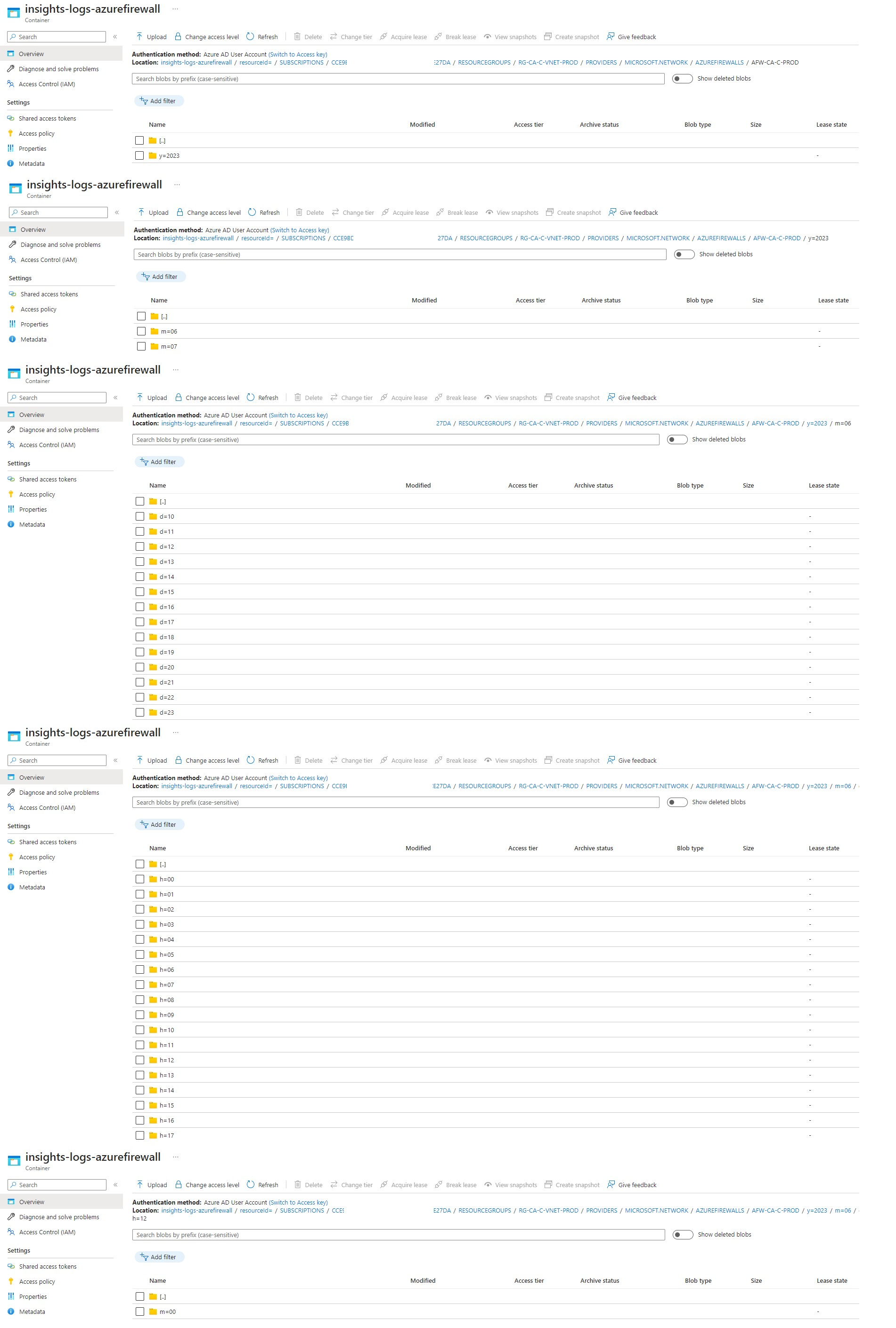

Navigating into this container will show that it a folder tree consisting of multiple subfolders containing the subscription, the name of the resource group containing the firewall, which also contains the VNet because it is a requirement to store the firewall resource in the same RG as the VNet:

insights-logs-azurefirewall / resourceId= / SUBSCRIPTIONS / CCE9BD62-xxxx-xxxx-xxxx-xxxx51CE27DA / RESOURCEGROUPS / RG-CA-C-VNET-PROD / PROVIDERS / MICROSOFT.NETWORK / AZUREFIREWALLS / AFW-CA-C-PROD

It then splits to the logs into subfolders with:

- Year

- Month

- Day

- Hour

- Minute (only 1 folder labeled as 00)

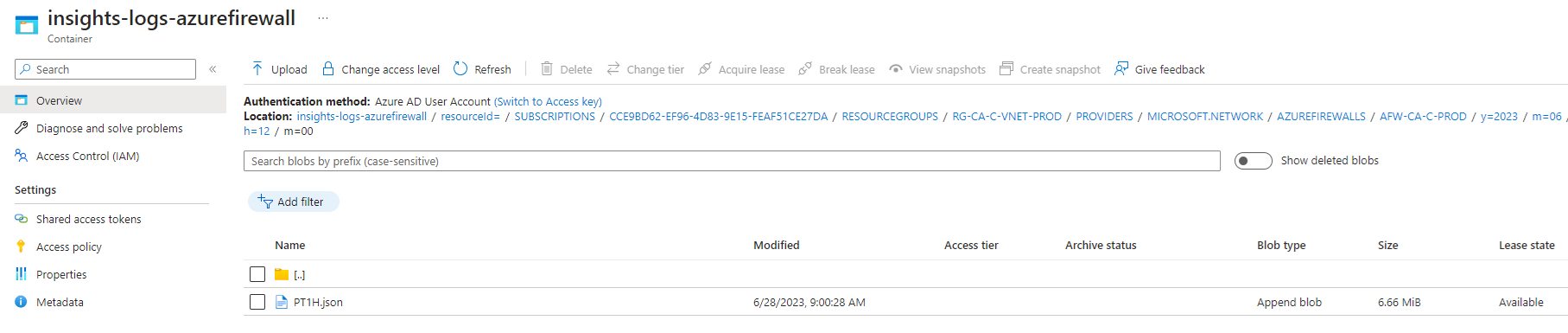

Drilling all the way down to the minute folder will contain a PT1H.json file that is the Append blob type. This is the file that will contain the firewall traffic log entries:

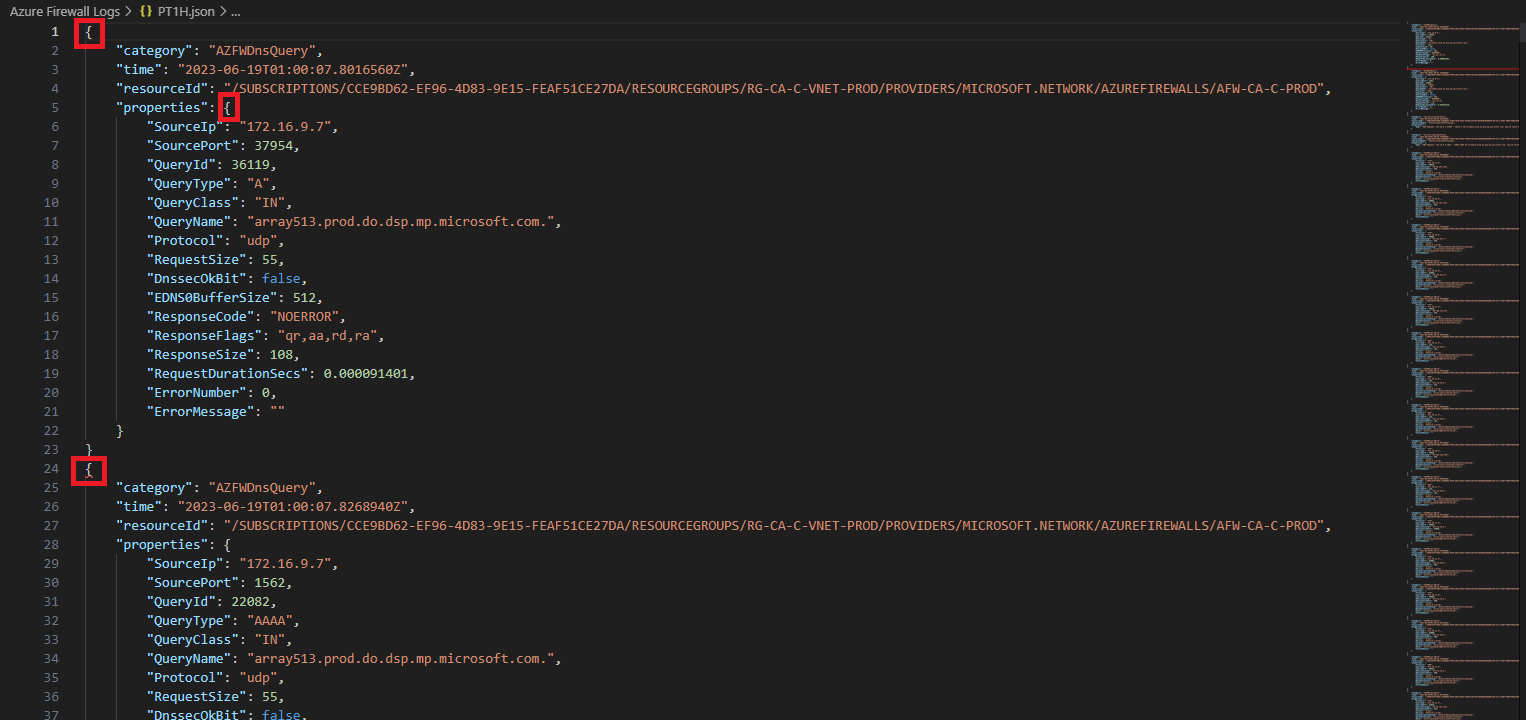

While browsing through the content of the PT1H.json file, I immediately noticed that the format of the entries did not appear to conform to any of the JSON Specifications (RFC 4627, 7159, 8259) because while I’m not very familiar with JSON format, I can see that:

- The beginning entries for the whole JSON file is missing an open square bracket and the end of the file is missing a close square bracket <- Line 1

- The nested properties values do not have an open square bracket before the brace and a close square bracket at the end of the close brace <- Line 5 and Line 22

- The close brace for each entry does not have a comma that separates each log <- Line 24

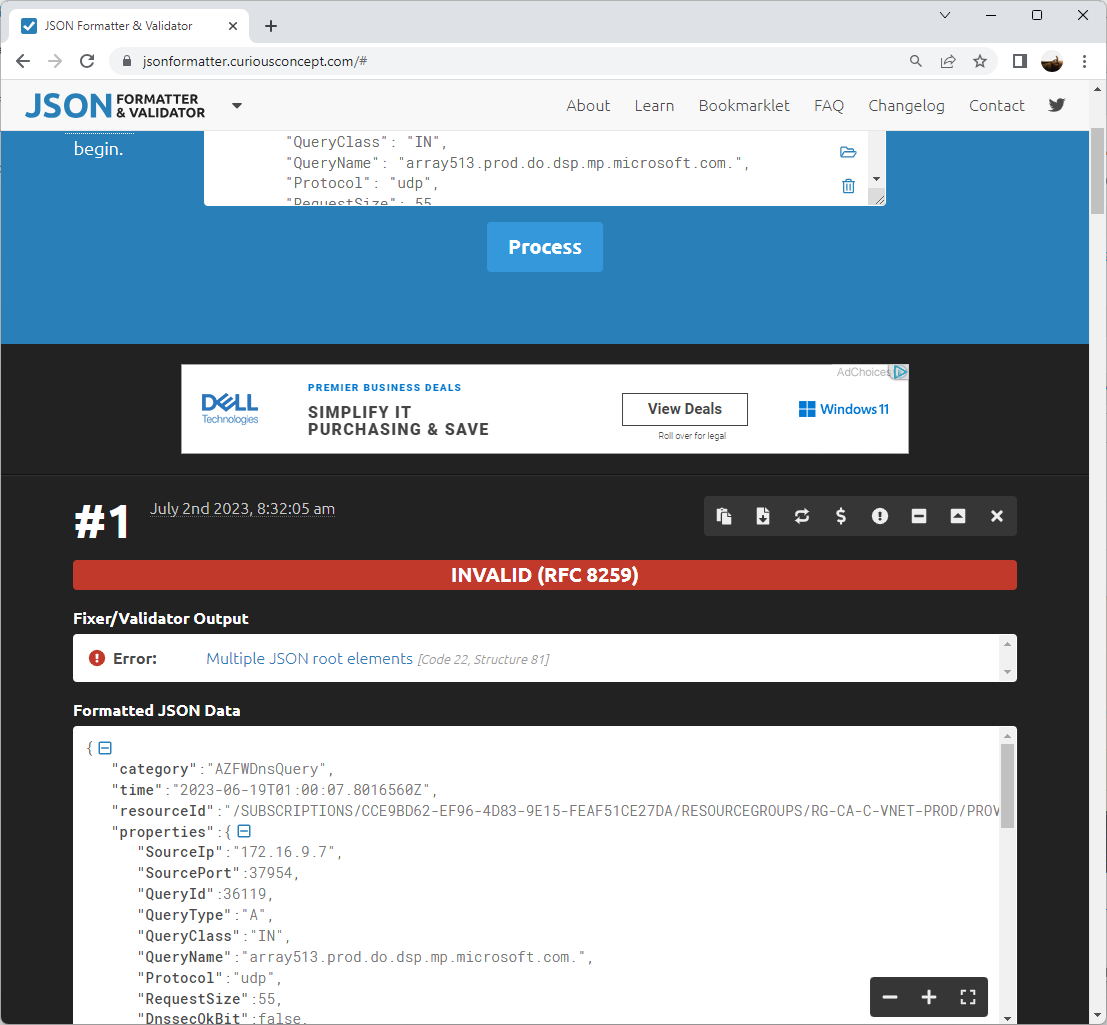

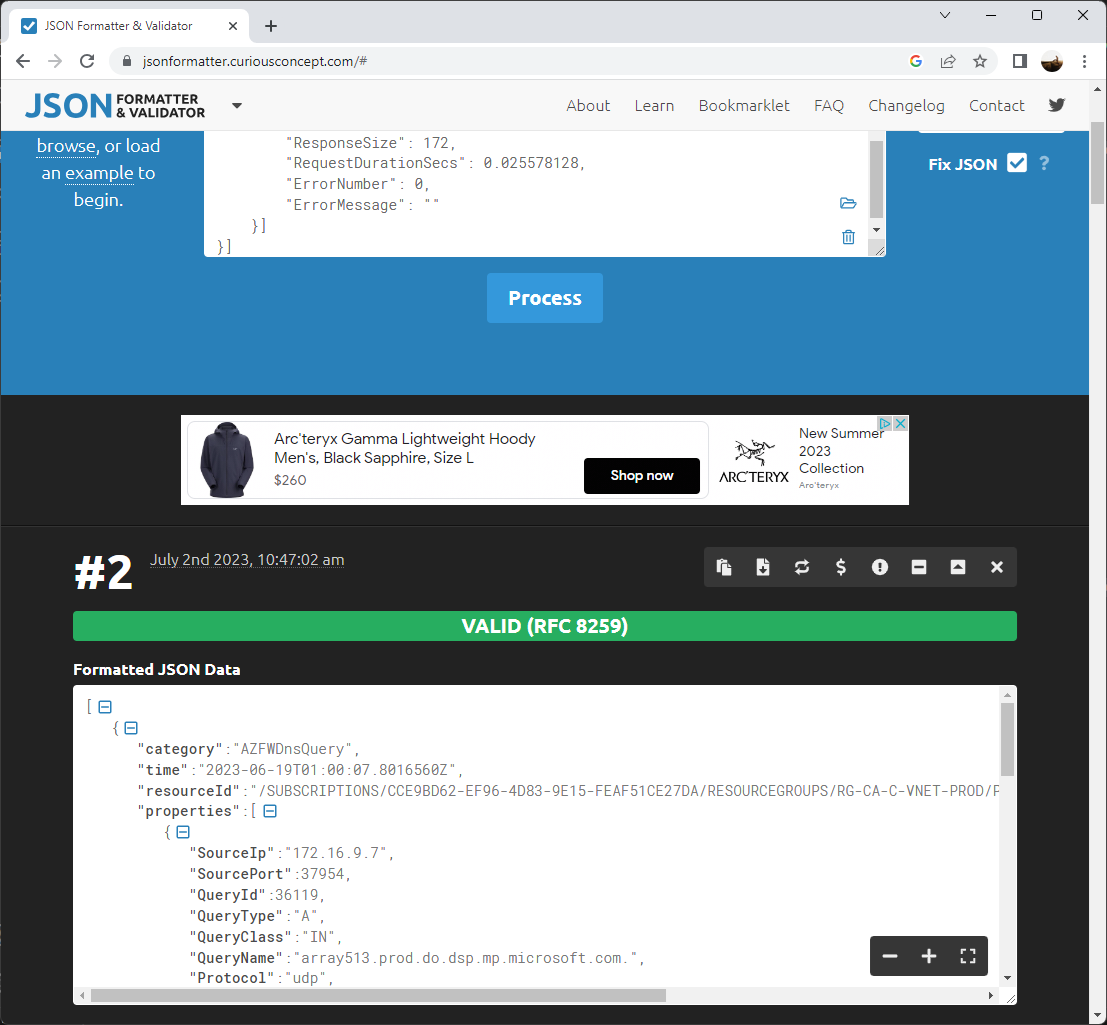

Trying to paste this into a JSON validator (https://jsonformatter.curiousconcept.com/) would show it does not conform to any RFC format:

Reviewing the Microsoft confirms that the format of blobs in a Storage Account is in JSON lines, where each record is delimited by a new line, with no outer records array and no commas between JSON records: https://learn.microsoft.com/en-us/azure/azure-monitor/logs/logs-data-export?tabs=portal#storage-account

Further reading shows that this was put in place since November 1st, 2018:

Prepare for format change to Azure Monitor platform logs archived to a storage account

https://learn.microsoft.com/en-us/previous-versions/azure/azure-monitor/essentials/resource-logs-blob-format

More reading about the JSON Lines format can be found here: https://jsonlines.org/

My objective was to simply use a PowerShell script to convert a JSON file into a CSV so it can be sent to the client for review but my script would not work with the JSON Line format. Fixing this manually by hand if there were 2 records wouldn’t be difficult, but these firewall logs have thousands of entries and I needed a way to automate the conversion. The whole process of getting this to work too quite a bit of my time so I wanted to write this blob post to help anyone who may come across the same challenge.

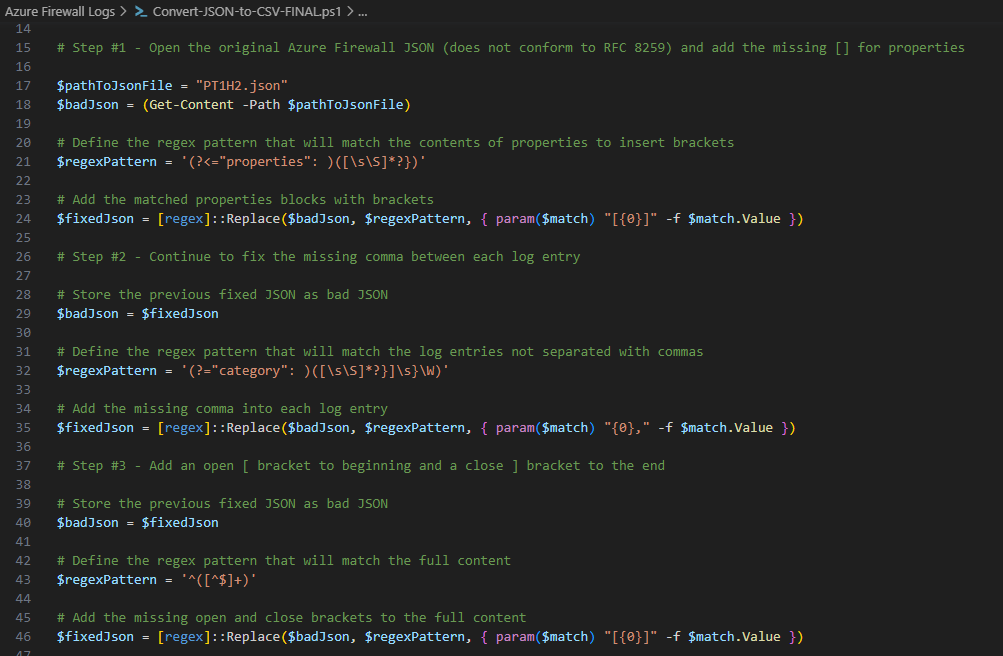

Step #1 – Fixing the poorly formatted JSON file

The first step was to fix the JSON Line formatted JSON file so it conforms to an RFC 8259 format. What this basically meant is addressing these 3 items:

- The beginning entries for the whole JSON file is missing an open square bracket and the end of the file is missing a close square bracket <- Line 1

- The nested properties values does not have an open square bracket before the brace and a close square bracket at the end of the close brace <- Line 5 and Line 22

- The close brace for each entry does not have a comma that separates each log <- Line 24

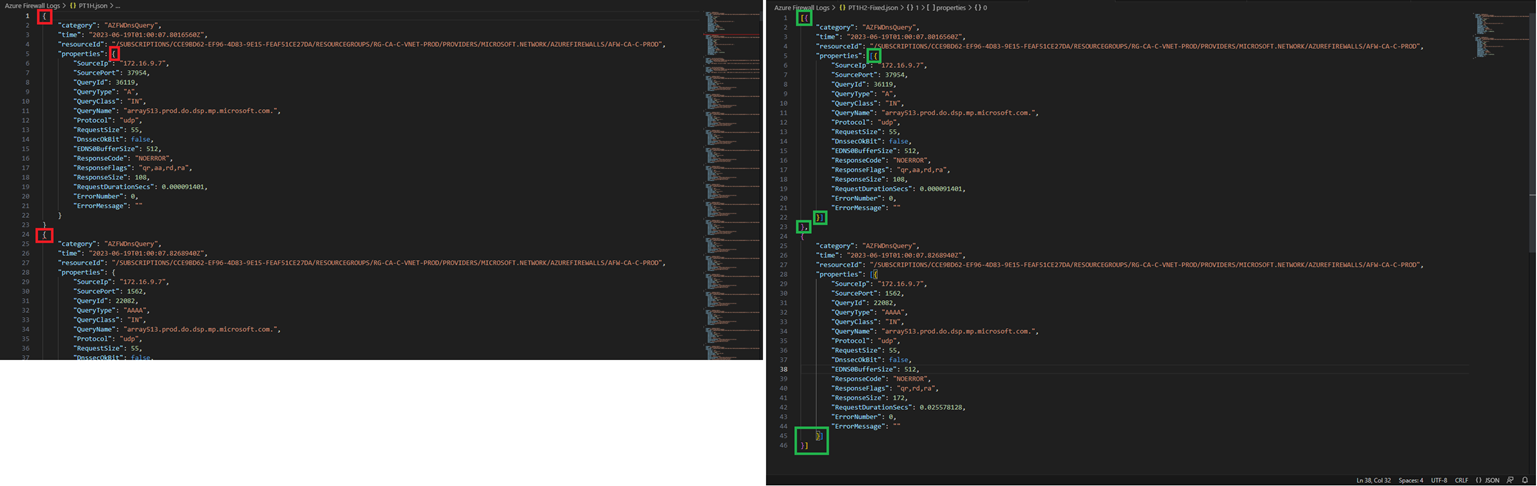

I’ve reduced the JSON file to only 2 log entries to show all the changes required:

- Add an open [ bracket after properties”:

- Add a close ] bracket at the end of properties }

- Add a comma after close } brace for each log entry but exclude last entry

- Add a bracket at the beginning of the JSON

- Add a bracket at the end of the JSON

The best way to approach this is to use Regex expressions to match the desired block or blocks of lines and add the desired brackets and/or comma. My days of using Regex goes back to when I worked on voice deployments for OCS 2007, Lync Server, Skype for Business Server, and Teams Direct Routing. My role over the past few years does not include this product so if you (the reader) see a better way of writing these expressions, please feel free to provide suggestions in the comments.

Add an open [ bracket after properties”: and add a close ] bracket at the end of properties }

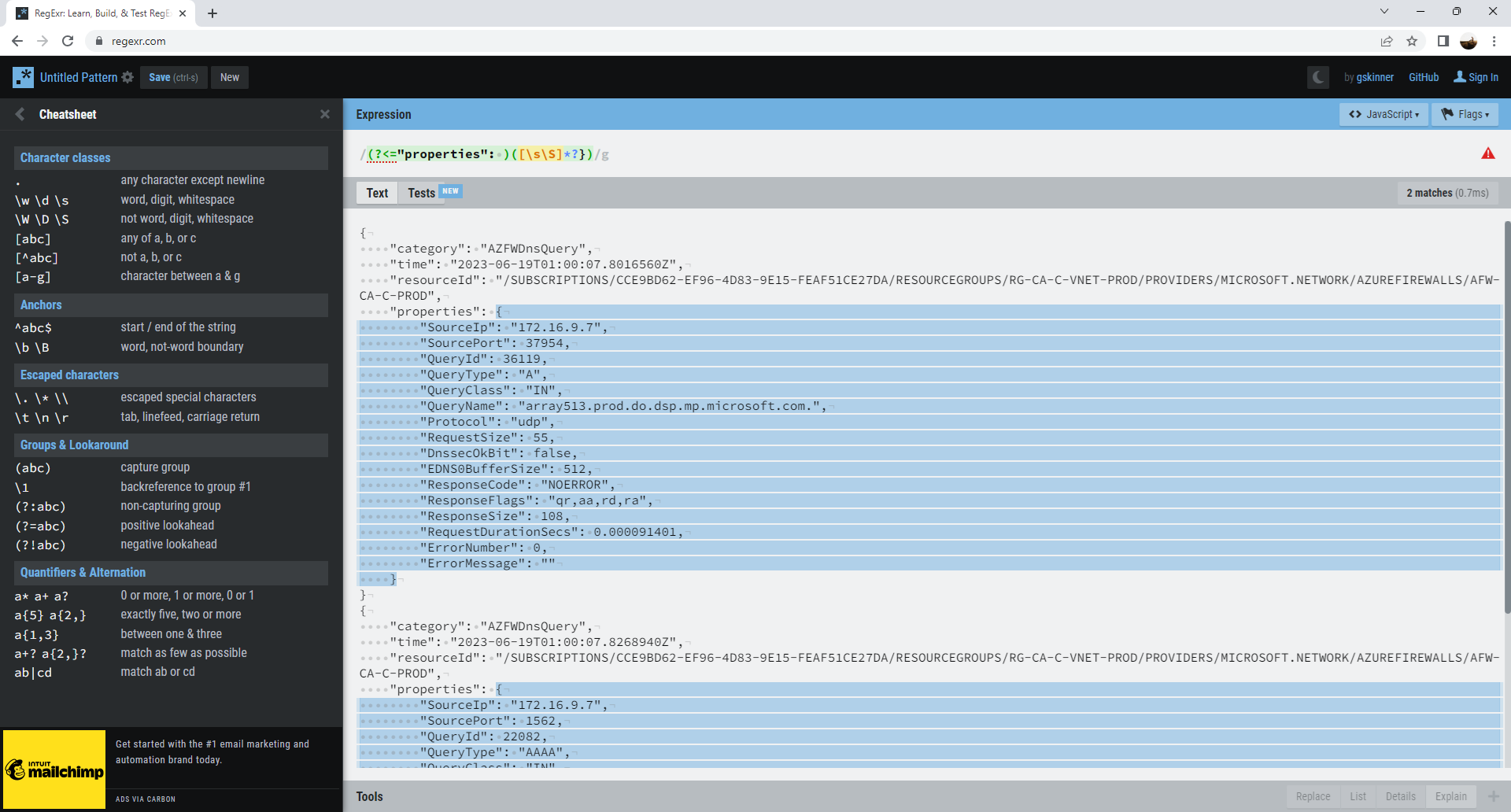

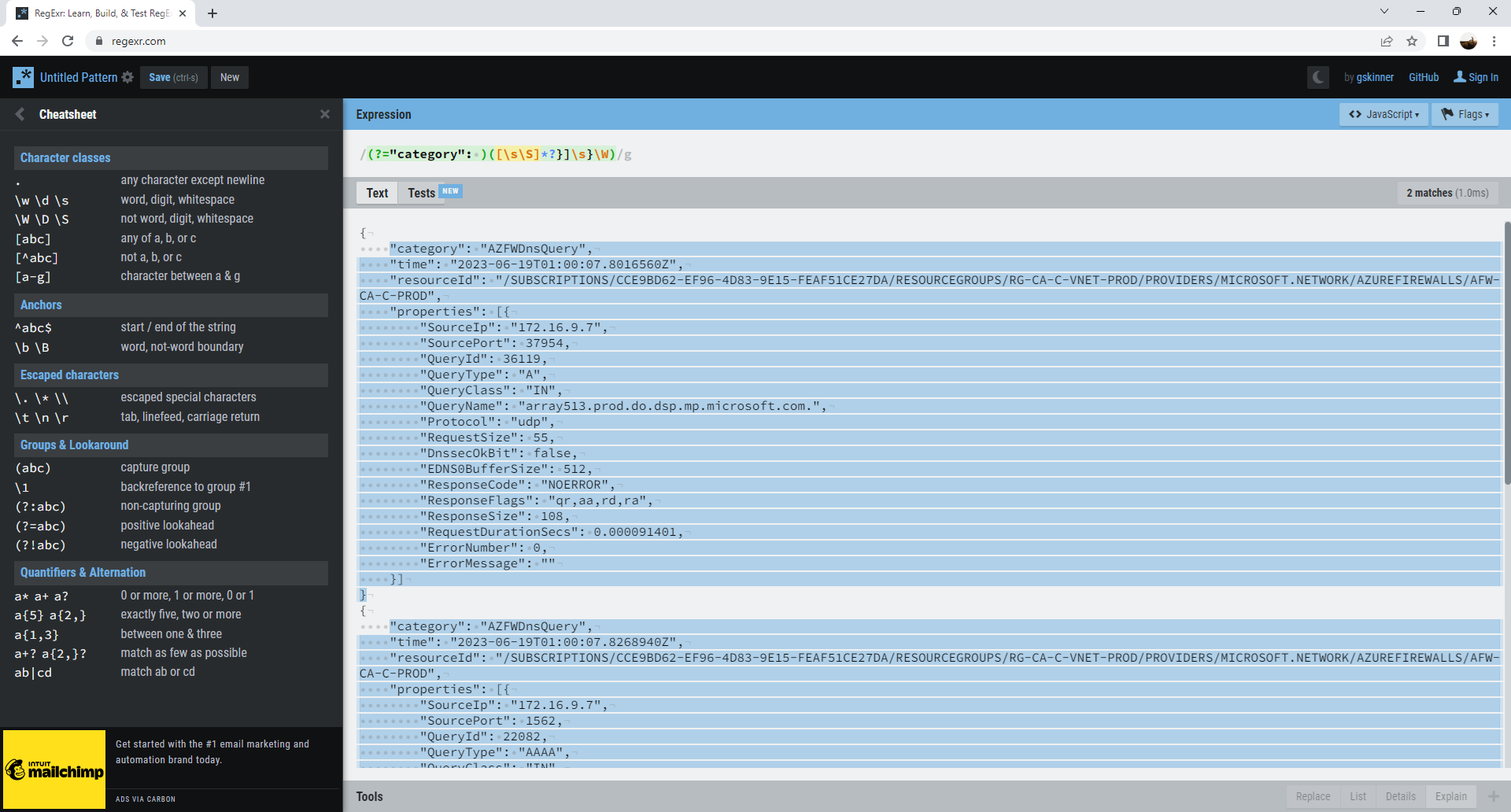

The Regex expression to match all the contents in the nested properties block is:

(?<=”properties”: )([sS]*?})

This can be validated on a Regex validator such as: https://regexr.com/

We can use the following PowerShell regex replace function to add the missing open and close square brackets:

$fixedJson = [regex]::Replace($badJson, $regexPattern, { param($match) “[{0}]” -f $match.Value })

Add a comma after close } brace for each log entry but exclude last entry

With the missing open and square brackets added, we can use the output and the following regex expression to match all of the log entries to add a comma for separation AND NOT include the last log at the end of the entries:

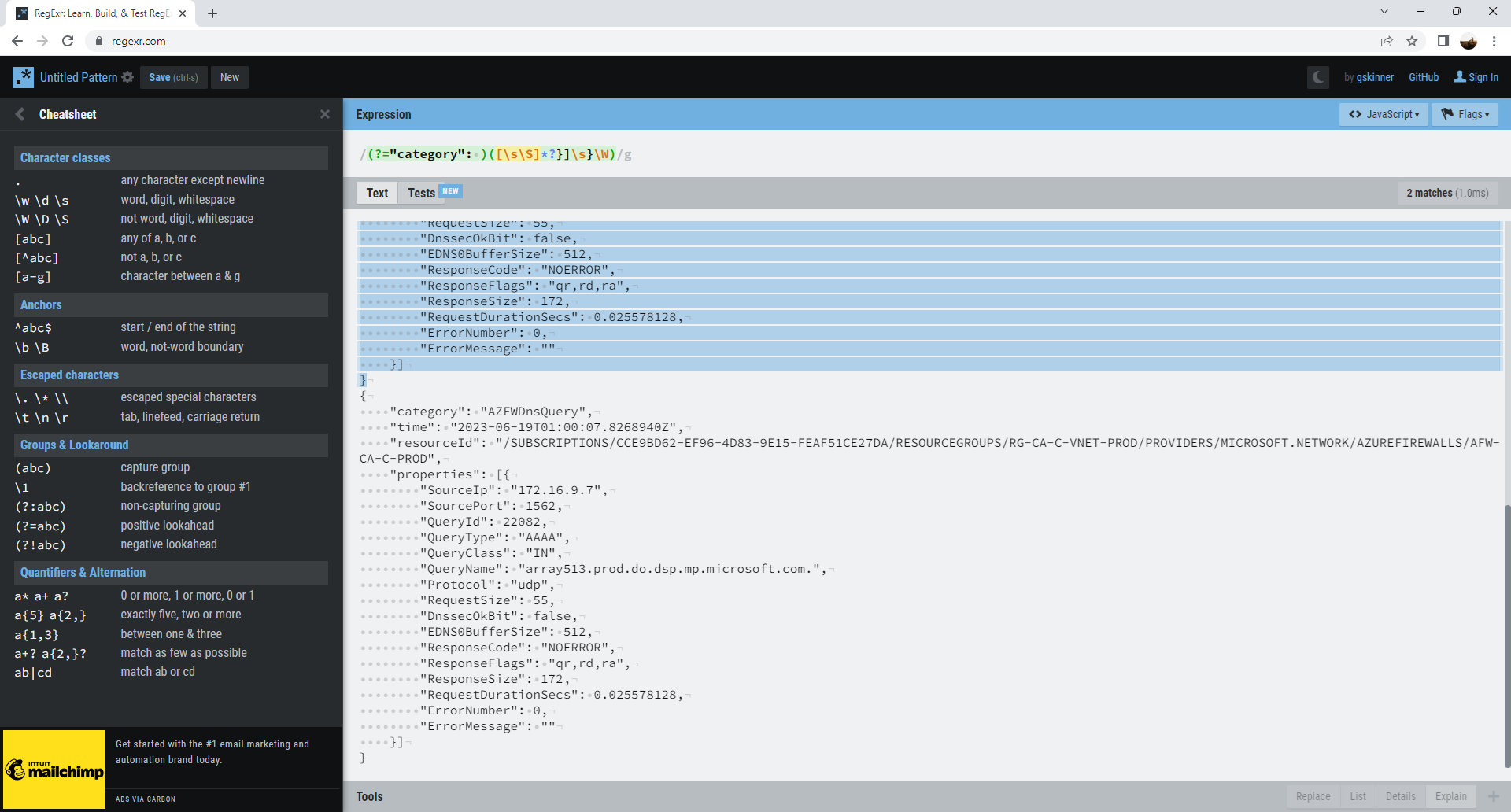

(?=”category”: )([sS]*?}]s}W)

Note that the last block for the log entry is excluded:

——————————— Update August 21-2023 —————————————

I realized that the previous RegEx expression I used would fail to match scenarios where there are spaces or line breaks between the square and curly brackets so I’ve updated the expression for the script on GitHub and adding the changes here.

(?=”category”: )([sS]*?}[sS]*?][sS]*?}W)

The following is a break down of each section of the RegEx expression:

(?=”category”: ) <– Match the first block before spanning the text after this

([sS]*?}[sS]*?][sS]*?}W) <– This is to match everything from the category and to the end

([sS]*?} <– Match everything that is a whitespace and not whitespace, words, digits and end at the curly bracket }

[sS]*?] <– Match everything that is a whitespace and not whitespace, words, digits and end at the square bracket ]

[sS]*?} <– Continue matching everything that is a whitespace and not whitespace, words, digits and end at the next curly bracket }

W) <– This excludes the last block

—————————————————————————————————————-

We can use the following PowerShell regex replace function add the missing comma between entries:

$fixedJson = [regex]::Replace($badJson, $regexPattern, { param($match) “{0},” -f $match.Value })

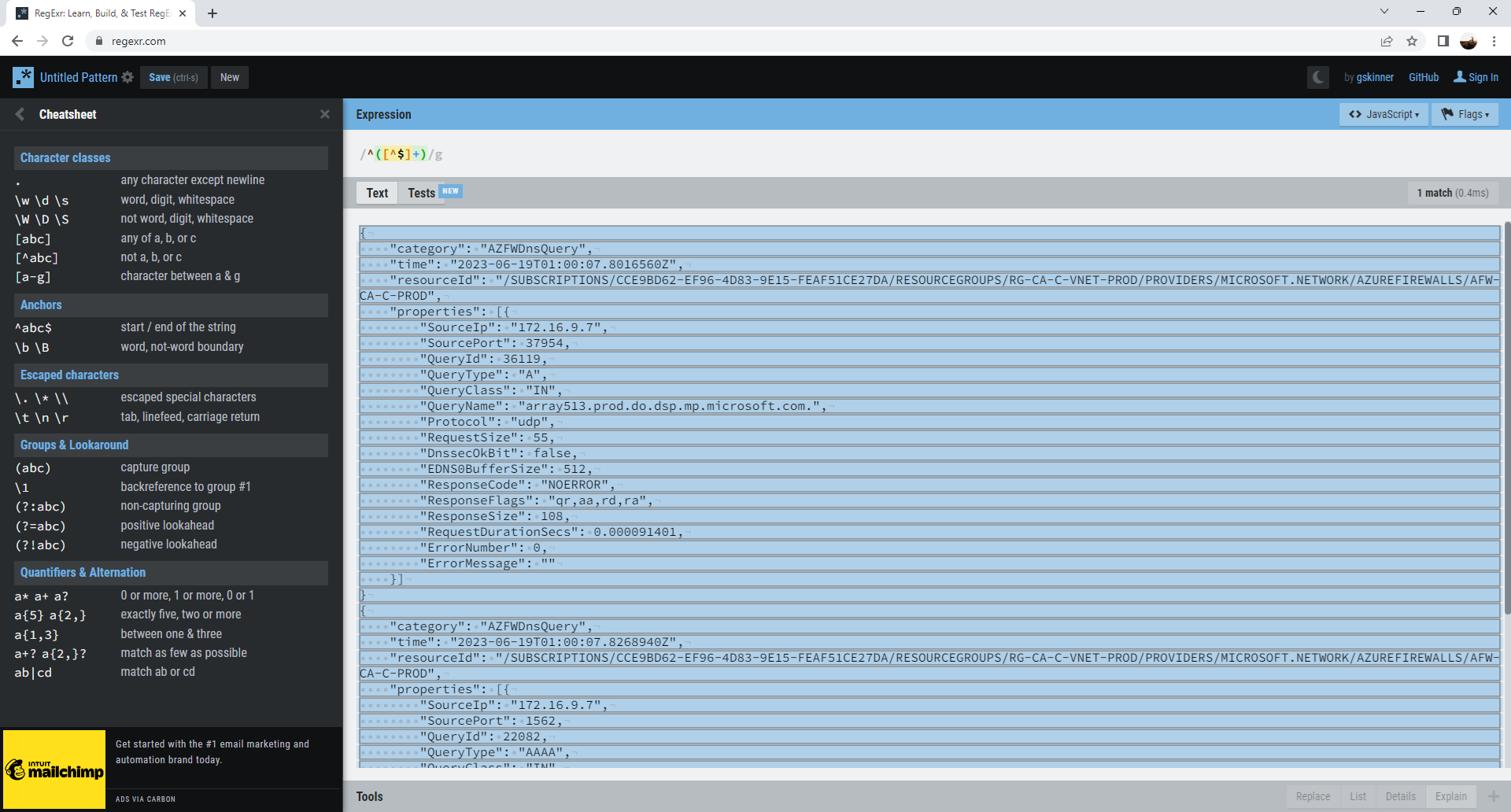

Add a bracket at the beginning of the JSON and add a bracket at the end of the JSON

With the comma added between each log, we can now proceed to add the open and close square bracket to the beginning and end of the file with the following regex expression:

^([^$]+)

We can use the following PowerShell regex replace function add the missing open and close square bracket to the beginning and end:

$fixedJson = [regex]::Replace($badJson, $regexPattern, { param($match) “[{0}]” -f $match.Value })

With the missing formatting added, we should now be able to validate the JSON file:

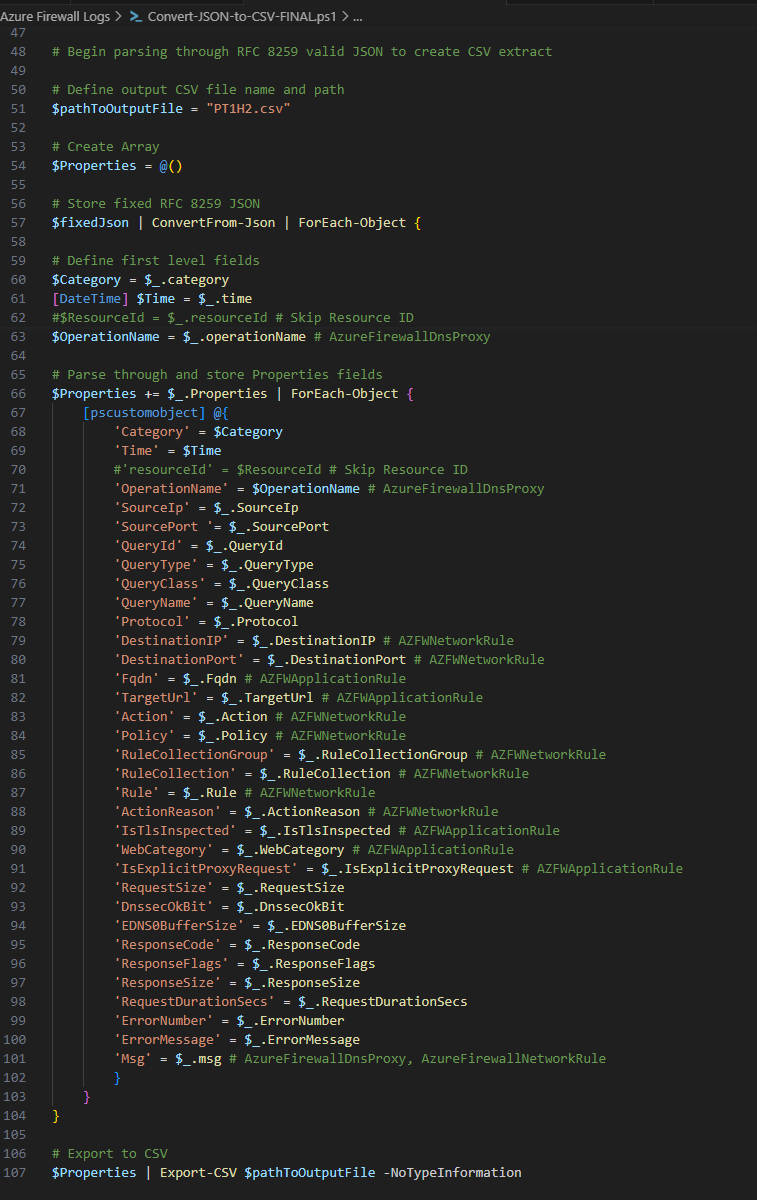

Step #2 – Create a PowerShell script that will read the Azure Firewall Storage Account JSON and convert to CSV

With the Regex expressions defined and missing brackets and braces defined, the next step is to write a PowerShell script that will read the native JSON file, format the JSON so it is RFC 8259 compliant, parse through each entry and place the log entry details into the rows and columns of the CSV file.

The script can be found in my following GitHub: https://github.com/terenceluk/Azure/blob/main/Azure%20Firewall/Convert-JSON-Logs-to-CSV.ps1

The components of the script are as follows:

1. The first portion where we use Regex to fix the JSON formatting

2. Begin parsing the formatted JSON file:

**Update the following 2 variables:

- $pathToJsonFile = “PT1H2.json”

- $pathToOutputFile = “PT1H2.csv”

When writing the portion of the code used for parsing the JSON file, I noticed that there wasn’t an easy way for me to automatically read through the column headings to avoid defining them directly because there are different type of records in the JSON file with varying headings. This meant in order to transfer all the records into a CSV, I would need to define all of the headings upfront. Since not all the headings will be used for every record, any entries that does not have the heading will have that cell blank.

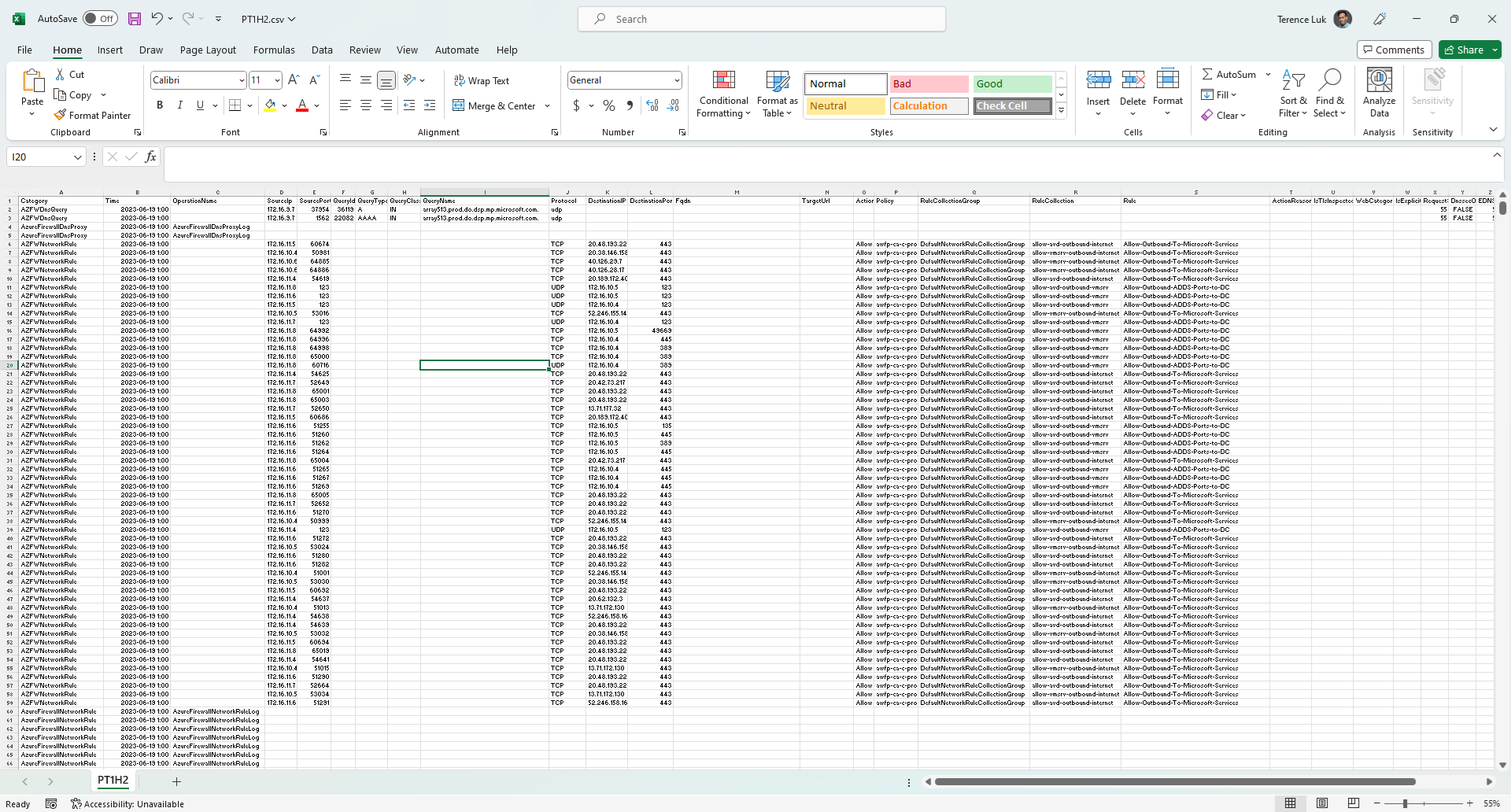

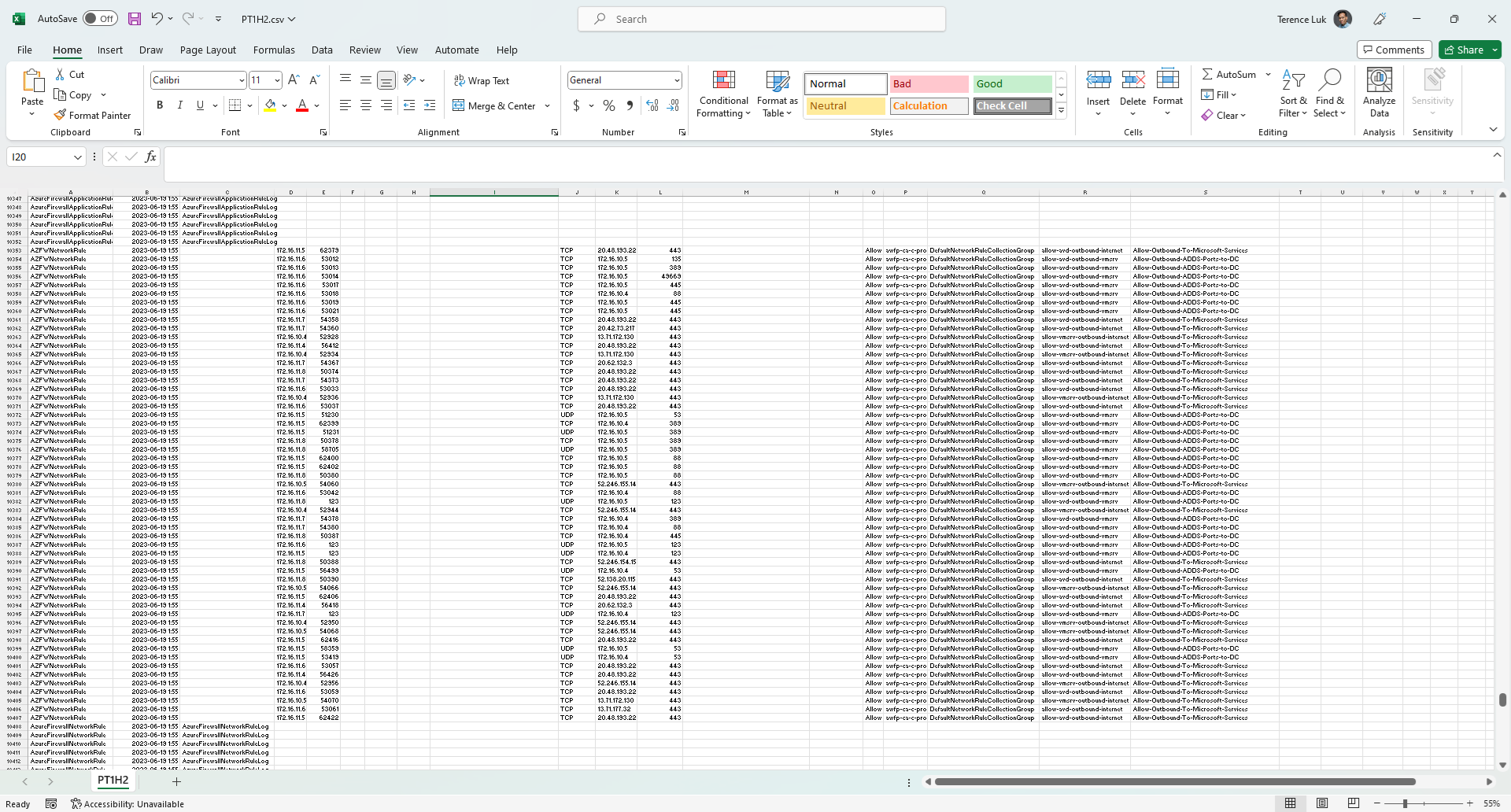

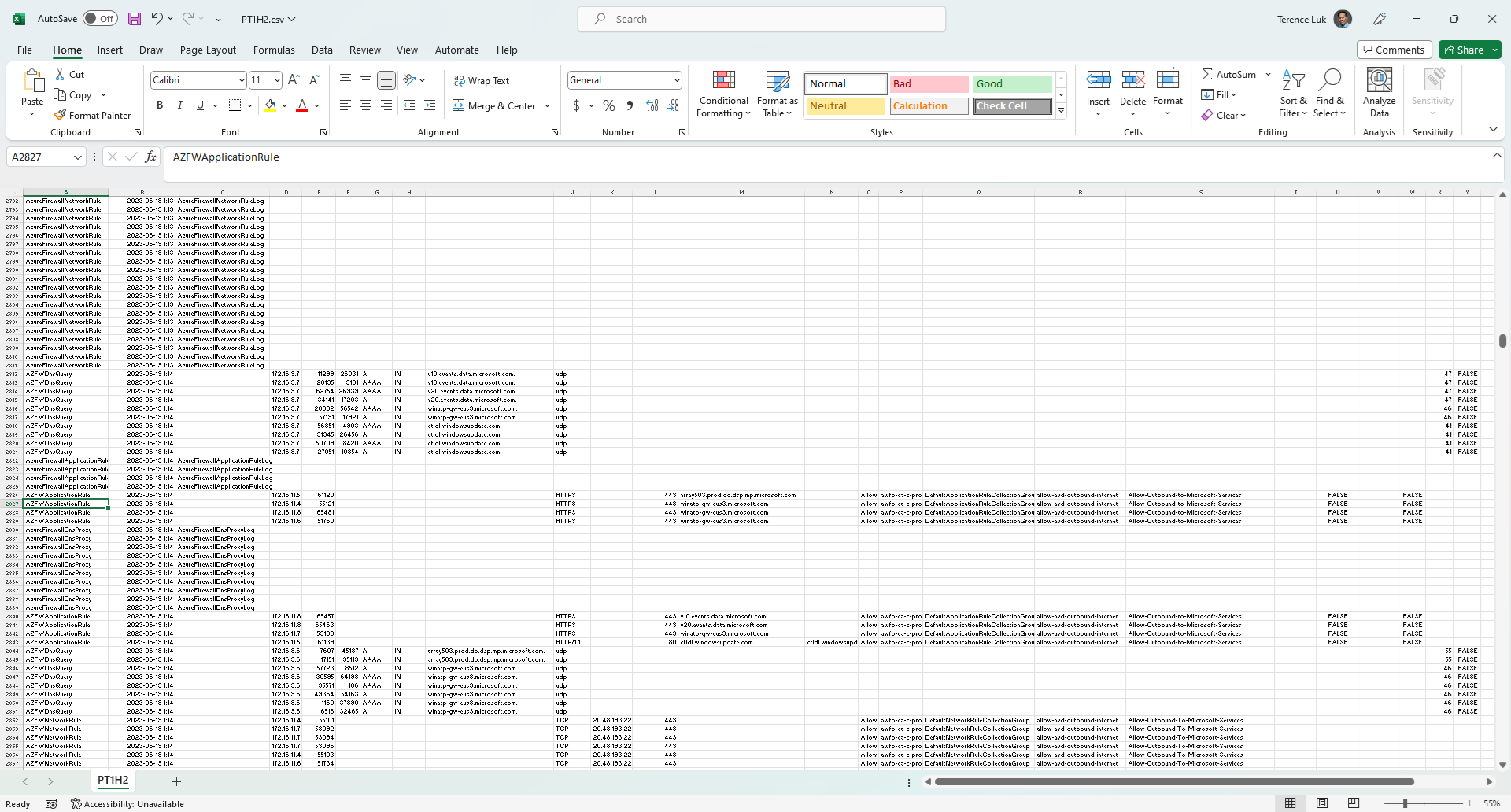

The end result of the export will look something like the following CSV:

The diagnostics settings I selected for this example included the Legacy Azure Diagnostics category so the logs will have some redundant records where the legacy entries have the details in the Msg column, while the newer category will have the record details split into their own columns.

I hope this blog post helps anyone who may be looking for a way to parse and create a CSV file from the Azure Firewall log JSON files. I’ll be writing a follow up post in the future to demonstrate using a script to read the folders in the storage account so this doesn’t have to be done manually with every JSON file at every hour of the day.