I’m not much of a data pipeline expert as the period where I spent half a year designing and configuring Synapse was over 2 years ago so when I was recently asked to look at a Data Factory issue, it took a bit of time to get that knowledge resurfaced. This problem caused me a lot of grief as I spent 18 hours glued to my computer trying to come to a resolution. Let me begin with the setup.

Environment and Problem

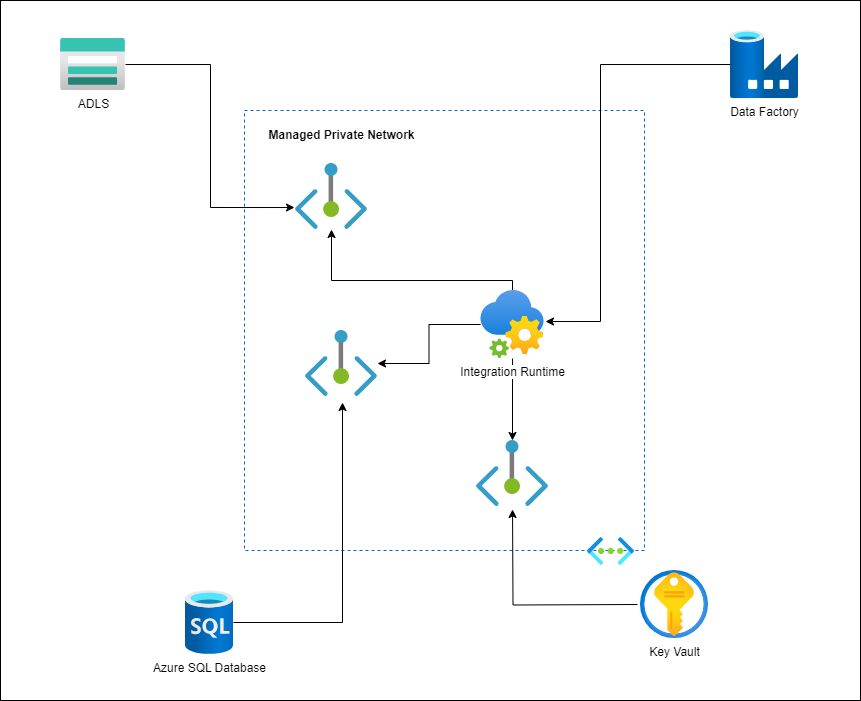

I’m a big fan of Managed Private Endpoints as it removes my dependency on the networking team to open up pathways for a Self Hosted Integration Runtime virtual machine to reach private endpoints of resources. In my scenario, the pipeline requires access to Key Vaults, Storage Accounts, and Azure SQL Databases so I naturally created a new Integration Runtime in a Managed Private Network, then created the respective Managed Private Endpoints.

What the team noticed when setting up the pipelines what that all Copy Data activities would fail with the error:

ERROR

Operation on target D365 External Transaction Processed Copy data failed: ErrorCode=DWCopyCommandOperationFailed,’Type=Microsoft.DataTransfer.Common.Shared.HybridDeliveryException,Message=’Warehouse’ Copy Command operation failed with error ”Content of directory on path ‘ https://contoso.dfs.core.windows.net/landing/repo/ef5478bd-2f54-8562-9tr2-3f2c59855e2/MSSQLImportCommand/ ‘ cannot be listed.’.,Source=Microsoft.DataTransfer.Connectors.MSSQLImport,”Type=Microsoft.Data.SqlClient.SqlException,Message=Content of directory on path ‘ https://contoso.dfs.core.windows.net/landing/repo/ef5478bd-2f54-8562-9tr2-3f2c59855e2/MSSQLImportCommand/ ‘ cannot be listed.,Source=Framework Microsoft SqlClient Data Provider,’

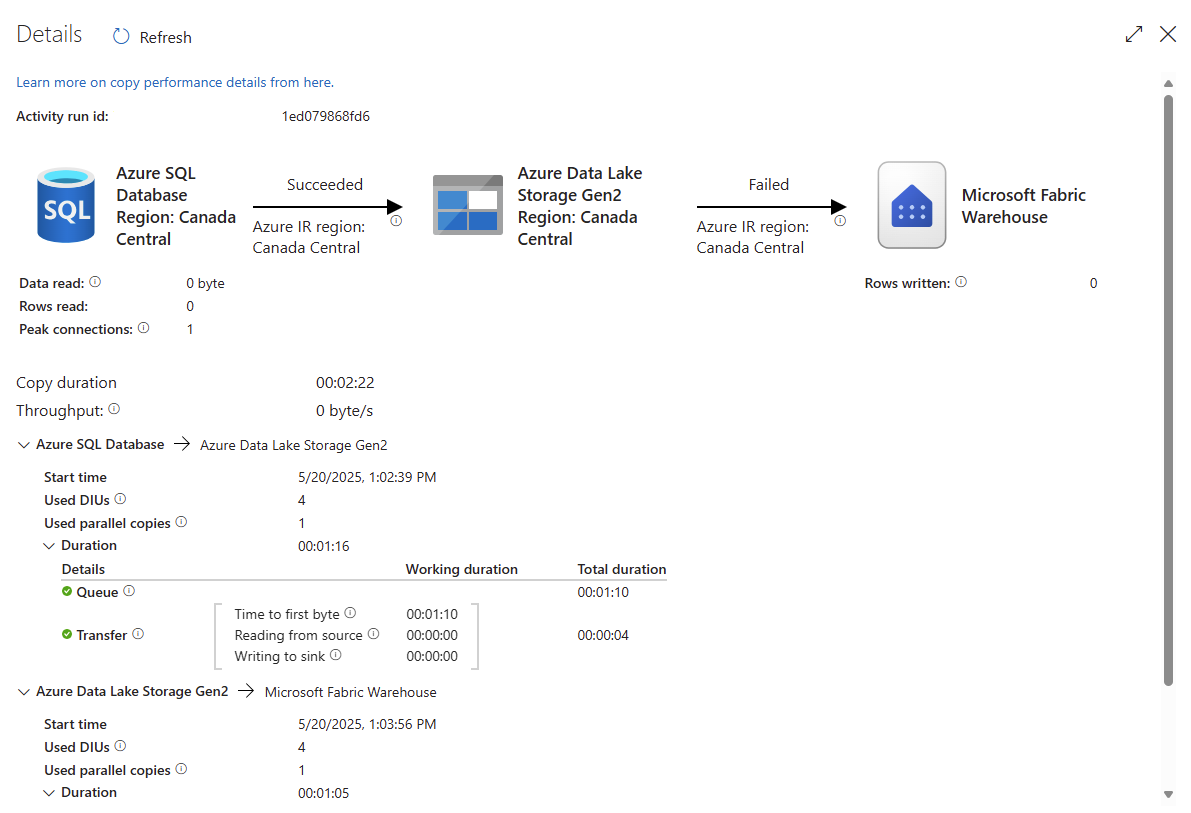

Launching the Copy Data activity displays the following:

Troubleshooting

Anyone looking at this error is going to naturally assume it is a networking error and it is but it is very misleading because even I thought it was the fact that the Integration Runtime wasn’t able to write or read to the staging area on the ADLS gen2. I began reviewing and validating items:

- Does the Data Factory’s identity have Storage Blob Data Contributor? <– This actually wasn’t relevant because staging account linked services does not support managed identities, it only supports SAS URL, Anonymous, or Account Key

- Are all of the MPEs (Managed Private Endpoints) approved?

- Is the Account Key for the storage account correct?

- Does the container exist?

- Are the folders created?

Aside from the above, I made sure all the obvious checks were completed but did identify any issues. From this point on, I kept digging deeper and deeper into the issue, and also opened up a Sev A ticket with Microsoft as I knew it would take them a bit of time to review and get close to a resolution. While waiting for the engineers to get back to me, I went ahead and tried a few other troubleshooting steps:

- Try switching to the AutoResolveIntegrationRuntime with the Storage Account configured as Enabled from all networks and was able to confirm the pipeline runs

- Switch to a Self Hosted Integration Runtime virtual machine and confirmed that the issue still persists

- Try switching to using a Storage Account blob storage as staging but the same error persists

- Try bypassing and disabling staging all together but this is not allowed for the copy job

- Try switching to Managed Identity for the ADLS but received a validation error of: Staging account linked to service “Authentication type” should be SAS URI, Anonymous or Account Key

- Try switching to the AutoResolveIntegrationRuntime with the Storage Account configured as Selected networks with the public IP address for the Data Factory region but it would still fail with the same error

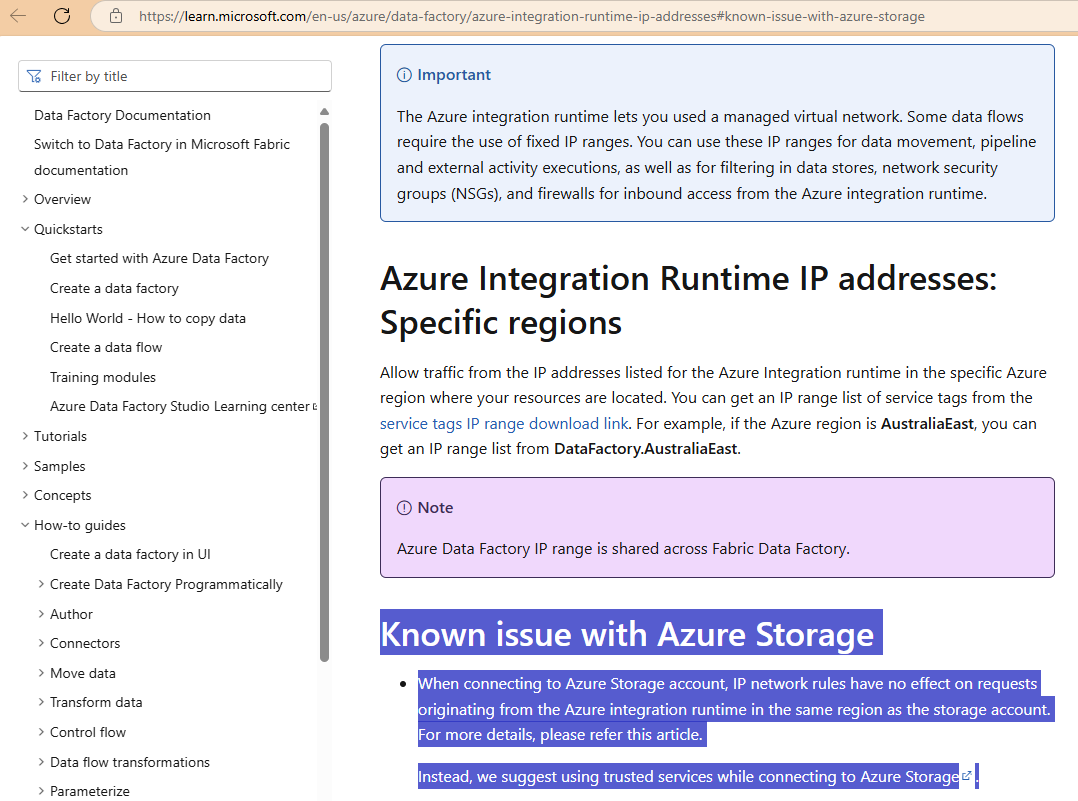

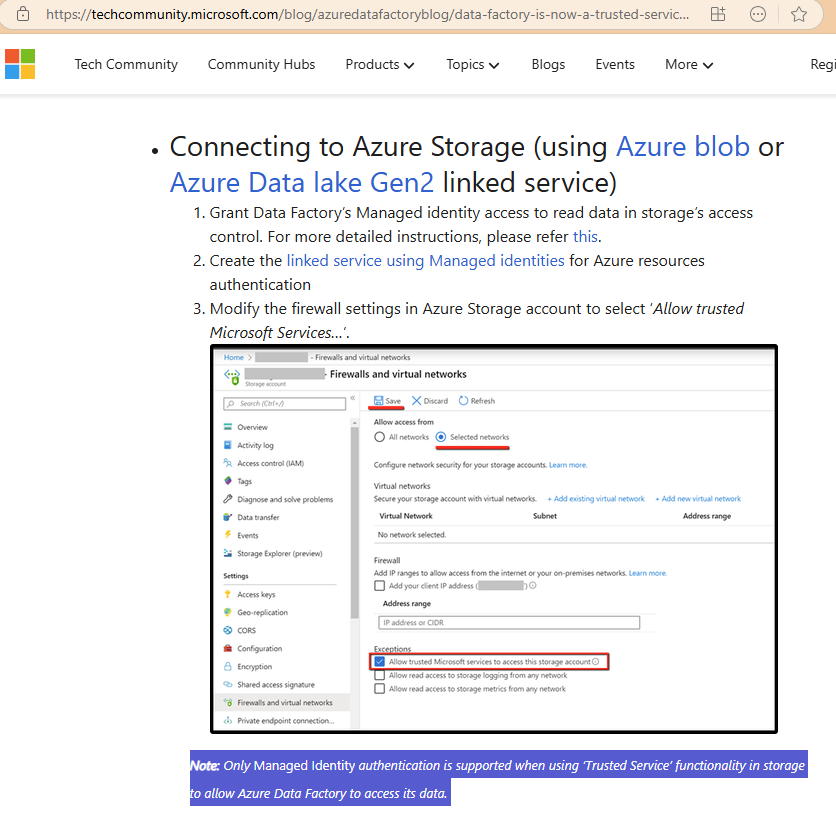

- Tried using the Allow Azure services on the trusted services list to access this storage account but the same issue still persists (more on this later)

- Try writing to another storage account in another region but the issue still persists (more on this later)

Searching through the internet did not yield much information but I was able to find 1 forum post where a person said they ended up switching the Storage Account configured as Enabled from all networks to get things to work. More troubleshooting while I waited for Microsoft brought me to the following observations:

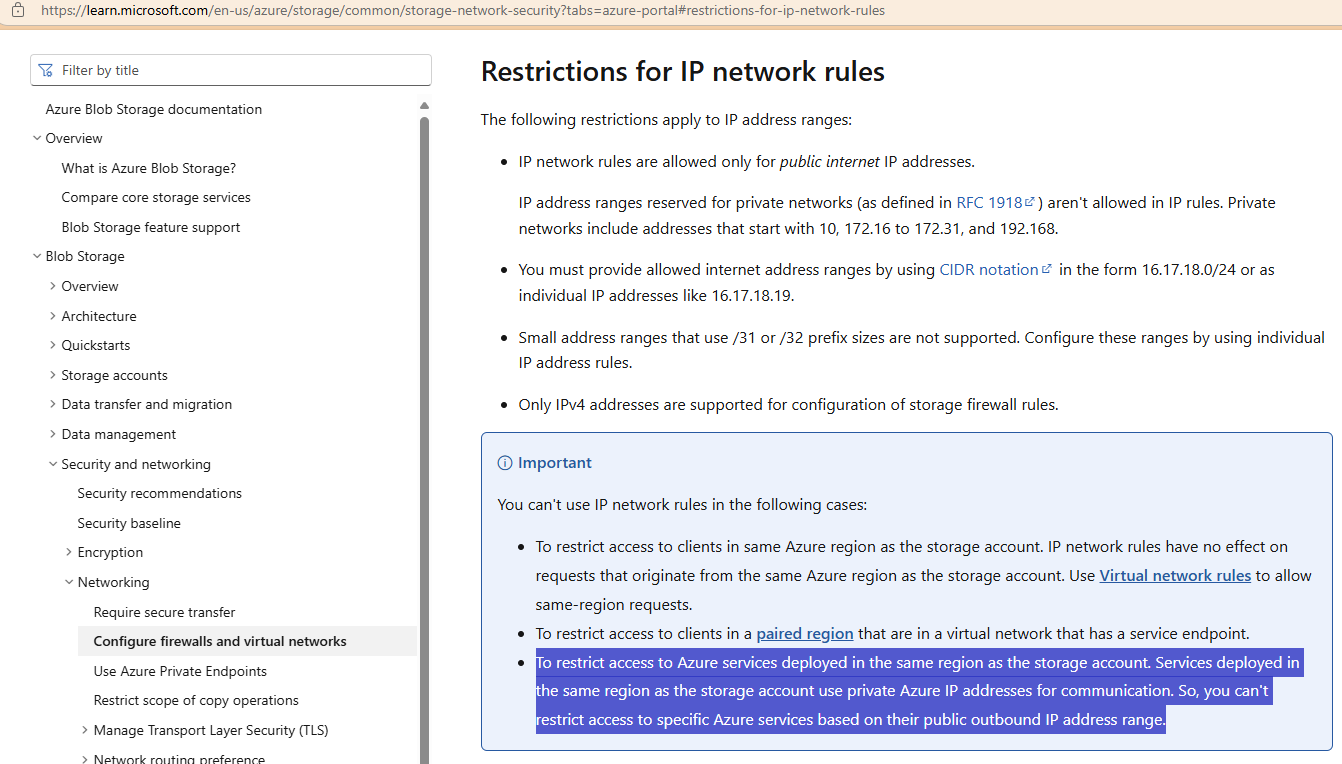

- When the Data Factory is in the same region as the Storage Account, even the AutoResolveIntegrationRuntime IR will use private IP addresses to get to the Storage Account as the diagnostic settings logs in the Log Analytics workspace indicate the IR was coming in with IP addresses such as 10.0.0.16. This means that it is not possible to use “Selected networks” because you cannot whitelist private RFC 1918 IP addresses (10.0.0.0/8, 172.16.0.0/12, and 192.168.0.0/16). Attempting to use Microsoft’s recommended Allow Azure services on the trusted services list to access this storage account. will not work because you need to use Managed Identity as Staging ADLS only allows Access Key, anonymous, or SAS.

- As indicated above, attempts to use Managed Private Endpoints or Self Hosted Integration Runtimes does not work as it fails after staging the data and then trying to upload it to Fabric.

- To circumvent the private IP address of the autoresolveintegrationruntime to the storage account, I tried write the staging data to the Canada East storage account, whitelisted the Data Factory autoresolveintegrationruntime public IP addresses and I can see it create the folders to stage the data but throws the same error message when trying to send the data to Fabric.

Here is a supporting document for IP network rules on the storage account and how private IP addresses are used by other services and you cannot add private IPs to the firewall: https://techcommunity.microsoft.com/blog/azuredatafactoryblog/data-factory-is-now-a-trusted-service-in-azure-storage-and-azure-key-vault-firew/964993

Here is a supporting document for how the IP network rules for a storage account has no effect on requests coming from a Data Factory IR in the same region and the recommendation is to use Allow Azure services on the trusted services list to access this storage account.: https://learn.microsoft.com/en-us/azure/data-factory/azure-integration-runtime-ip-addresses#known-issue-with-azure-storage

However, staging accounts used in linked services does not supported the required Managed Identity:

So giving this more thought, the avenues I wanted to try were:

- Try to break the copy job into 2:

- First copy the Azure SQL DB data to the staging ADLS account

- Then another job that copies the staging data into Fabric

- Try to use Fabric’s Data Pipeline to copy the data from Azure SQL DB to its Lake House for staging, then into the Fabric Data Warehouse

- Try to provision a private endpoint for the fabric so our integration runtime can allow us to reach it on a private network but this also wouldn’t work

Resolution

So after 10 hours of opening the ticket with Microsoft, an engineer from the APAC ADF team got back to me and rounded up a Storage Account, Networking and ADF (himself) team together to speak to me. The networking (Brian) and ADF (Tong) engineers were fantastic as I showed them my findings and perform some tests that they recommended. Tong was especially helpful as the duration he had me wait was really him reaching out to the product team to understand why I was experiencing this issue.

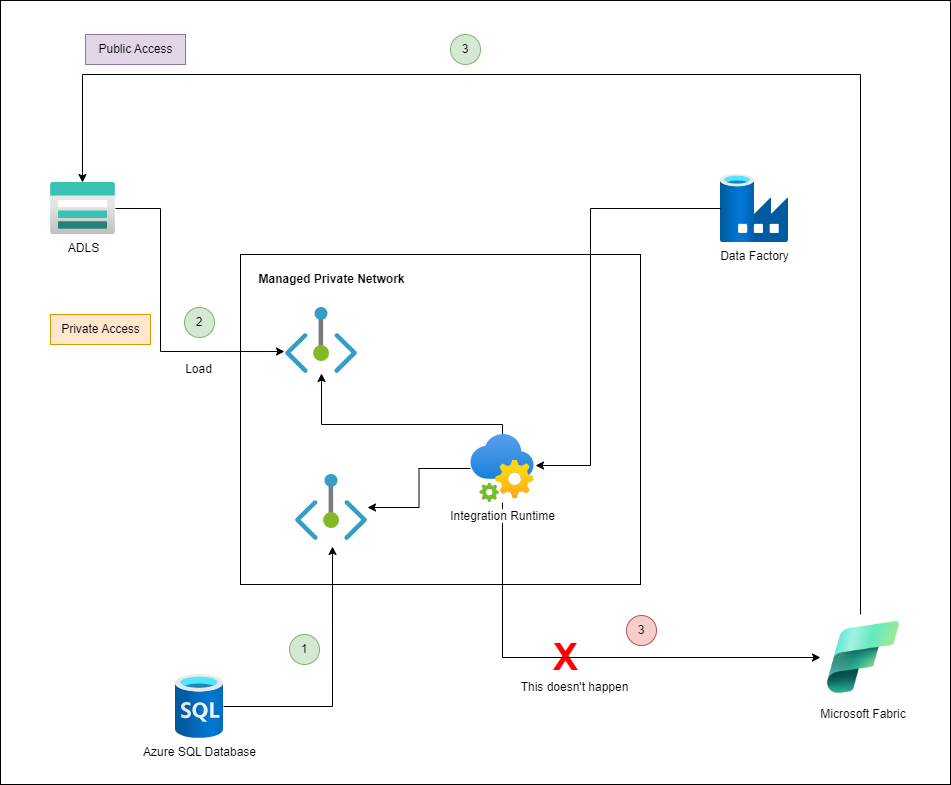

He explained the situation to me as I drew this diagram to confirm that I understand him correctly:

Tong told me that he confirmed with the product team that when the sink happens with Fabric, the traffic doesn’t actually come from the IR, it is actually the IR telling the Fabric that they should go copy the data from the ADLS. This explains why we see the error indicating:

ERROR

Operation on target D365 External Transaction Processed Copy data failed: ErrorCode=DWCopyCommandOperationFailed,’Type=Microsoft.DataTransfer.Common.Shared.HybridDeliveryException,Message=’Warehouse’ Copy Command operation failed with error ”Content of directory on path ‘ https://contoso.dfs.core.windows.net/landing/repo/ef5478bd-2f54-8562-9tr2-3f2c59855e2/MSSQLImportCommand/ ‘ cannot be listed.’.,Source=Microsoft.DataTransfer.Connectors.MSSQLImport,”Type=Microsoft.Data.SqlClient.SqlException,Message=Content of directory on path ‘ https://contoso.dfs.core.windows.net/landing/repo/ef5478bd-2f54-8562-9tr2-3f2c59855e2/MSSQLImportCommand/ ‘ cannot be listed.,Source=Framework Microsoft SqlClient Data Provider,’

The IR isn’t the one throwing this message, the Fabric is. What he suggested was that we can try whitelisting the public IP addresses for the ServiceFabric.CanadaCentral IP addresses in the JSON file provided by Microsoft that lists all the outbound IP addresses into the Storage Account’s firewall to see if the sink would work. This was when I wasn’t very impressed with the quiet Storage Account engineer as I asked him whether he saw any traffic and he didn’t provide any information. I had Log Analytics enabled for the diagnostics so started querying the logs and can see the following:

- Fabric appears to be coming in with the CallerIpAddress 10.0.0.4 (UserAgentHeader is SQLBLOBACCESS) <– the StatusText shows IpAuthorizationError with StatusCode 403

- Data Factory appears to be coming in with the CallerIpAddress 10.90.0.8 (confirmed as the UserAgentHeader begins with AzureDataFactoryCopy FxVersion….) <– the StatusText shows Success with StatusCode 200 or 206

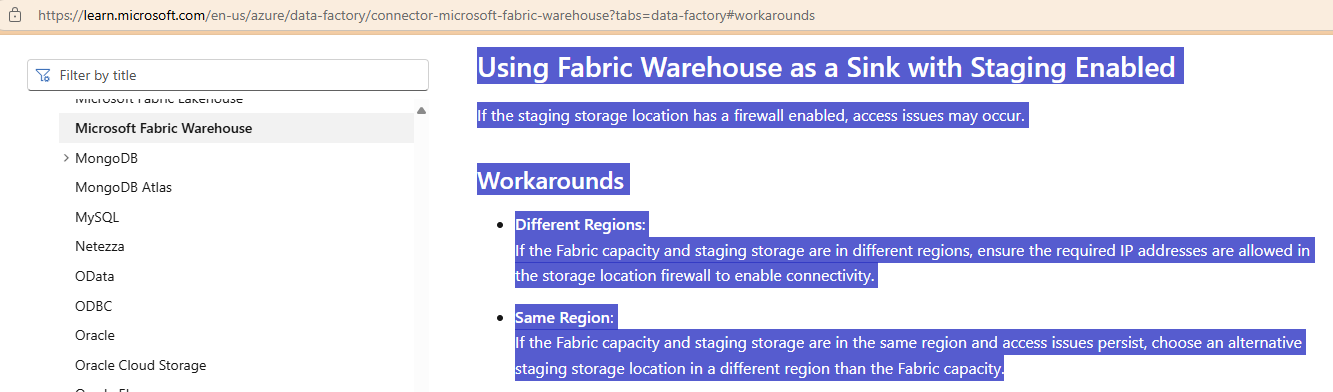

So it didn’t appear the Fabric was coming in with the public IP and we assumed that it’s because all the resources were in Canada Central. This made me think whether the storage account in Canada East would be a viable workaround so Tong said he’ll check. In 2 hours, Tong came back and provided the following documentation:

Using Fabric Warehouse as a Sink with Staging Enabled

If the staging storage location has a firewall enabled, access issues may occur.

Workarounds

- Different Regions:

If the Fabric capacity and staging storage are in different regions, ensure the required IP addresses are allowed in the storage location firewall to enable connectivity. - Same Region:

If the Fabric capacity and staging storage are in the same region and access issues persist, choose an alternative staging storage location in a different region than the Fabric capacity.

The document suggests that having the staging ADLS in another region would correct the issue so I gave this a go the next day but it didn’t work and I could not see the inbound traffic to the storage account in Log Analytics. I don’t have an update on the resolution but will update this post when I have more information.

I hope this helps anyone out there who may find themselves in the same situation as I did and nothing out on the internet is available to troubleshoot. It has been a while since I’ve ran into a situation as such but I am extremely impressed with Tong and Brian from the APAC team.